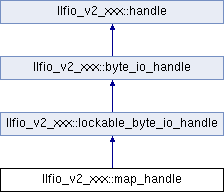

A handle to a memory mapped region of memory, either backed by the system page file or by a section.

#include "map_handle.hpp"

Classes

| struct | cache_statistics |

| Statistics about the map handle cache. | |

Public Types

| enum class | memory_accounting_kind { unknown , commit_charge , over_commit } |

| The kind of memory accounting this system uses. | |

| using | extent_type = byte_io_handle::extent_type |

| using | size_type = byte_io_handle::size_type |

| using | mode = byte_io_handle::mode |

| using | creation = byte_io_handle::creation |

| using | caching = byte_io_handle::caching |

| using | flag = byte_io_handle::flag |

| using | buffer_type = byte_io_handle::buffer_type |

| using | const_buffer_type = byte_io_handle::const_buffer_type |

| using | buffers_type = byte_io_handle::buffers_type |

| using | const_buffers_type = byte_io_handle::const_buffers_type |

| template<class T > | |

| using | io_request = byte_io_handle::io_request< T > |

| template<class T > | |

| using | io_result = byte_io_handle::io_result< T > |

| using | path_type = byte_io_handle::path_type |

| enum class | barrier_kind : uint8_t { nowait_view_only , wait_view_only , nowait_data_only , wait_data_only , nowait_all , wait_all } |

| The kinds of write reordering barrier which can be performed. | |

| using | registered_buffer_type = std::shared_ptr< _registered_buffer_type > |

| The registered buffer type used by this handle. | |

Public Member Functions

| constexpr | map_handle () |

| Default constructor. | |

| map_handle (byte *addr, size_type length, size_type pagesize, section_handle::flag flags, section_handle *section=nullptr, extent_type offset=0) noexcept | |

Construct an instance managing pages at addr, length, pagesize and flags | |

| constexpr | map_handle (map_handle &&o) noexcept |

| Implicit move construction of map_handle permitted. | |

| map_handle (const map_handle &)=delete | |

No copy construction (use clone()) | |

| map_handle & | operator= (map_handle &&o) noexcept |

| Move assignment of map_handle permitted. | |

| map_handle & | operator= (const map_handle &)=delete |

| No copy assignment. | |

| void | swap (map_handle &o) noexcept |

| Swap with another instance. | |

| virtual result< void > | close () noexcept override |

| Unmap the mapped view. | |

| virtual native_handle_type | release () noexcept override |

| Releases the mapped view, but does NOT release the native handle. | |

| section_handle * | section () const noexcept |

| The memory section this handle is using. | |

| void | set_section (section_handle *s) noexcept |

| Sets the memory section this handle is using. | |

| byte * | address () const noexcept |

| The address in memory where this mapped view resides. | |

| extent_type | offset () const noexcept |

| The offset of the memory map. | |

| size_type | capacity () const noexcept |

| The reservation size of the memory map. | |

| size_type | length () const noexcept |

| The size of the memory map. This is the accessible size, NOT the reservation size. | |

| span< byte > | as_span () noexcept |

| The memory map as a span of bytes. | |

| span< const byte > | as_span () const noexcept |

| This is an overloaded member function, provided for convenience. It differs from the above function only in what argument(s) it accepts. | |

| size_type | page_size () const noexcept |

| The page size used by the map, in bytes. | |

| bool | is_nvram () const noexcept |

| True if the map is of non-volatile RAM. | |

| result< size_type > | update_map () noexcept |

| Update the size of the memory map to that of any backing section, up to the reservation limit. | |

| result< size_type > | truncate (size_type newsize, bool permit_relocation) noexcept |

| result< buffer_type > | commit (buffer_type region, section_handle::flag flag=section_handle::flag::readwrite) noexcept |

| result< buffer_type > | decommit (buffer_type region) noexcept |

| result< void > | zero_memory (buffer_type region) noexcept |

| result< buffer_type > | do_not_store (buffer_type region) noexcept |

| io_result< buffers_type > | read (io_request< buffers_type > reqs, deadline d=deadline()) noexcept |

| Read data from the open handle, preferentially using any i/o multiplexer set over the virtually overridable per-class implementation. | |

| io_result< size_type > | read (extent_type offset, std::initializer_list< buffer_type > lst, deadline d=deadline()) noexcept |

| This is an overloaded member function, provided for convenience. It differs from the above function only in what argument(s) it accepts. | |

| io_result< const_buffers_type > | write (io_request< const_buffers_type > reqs, deadline d=deadline()) noexcept |

| Write data to the open handle, preferentially using any i/o multiplexer set over the virtually overridable per-class implementation. | |

| io_result< size_type > | write (extent_type offset, std::initializer_list< const_buffer_type > lst, deadline d=deadline()) noexcept |

| This is an overloaded member function, provided for convenience. It differs from the above function only in what argument(s) it accepts. | |

| virtual result< void > | lock_file () noexcept |

| Locks the inode referred to by the open handle for exclusive access. | |

| virtual bool | try_lock_file () noexcept |

Tries to lock the inode referred to by the open handle for exclusive access, returning false if lock is currently unavailable. | |

| virtual void | unlock_file () noexcept |

| Unlocks a previously acquired exclusive lock. | |

| virtual result< void > | lock_file_shared () noexcept |

| Locks the inode referred to by the open handle for shared access. | |

| virtual bool | try_lock_file_shared () noexcept |

Tries to lock the inode referred to by the open handle for shared access, returning false if lock is currently unavailable. | |

| virtual void | unlock_file_shared () noexcept |

| Unlocks a previously acquired shared lock. | |

| virtual result< extent_guard > | lock_file_range (extent_type offset, extent_type bytes, lock_kind kind, deadline d=deadline()) noexcept |

EXTENSION: Tries to lock the range of bytes specified for shared or exclusive access. Note that this may, or MAY NOT, observe whole file locks placed with lock_file(), lock_file_shared() etc. | |

| result< extent_guard > | lock_file_range (io_request< buffers_type > reqs, deadline d=deadline()) noexcept |

| This is an overloaded member function, provided for convenience. It differs from the above function only in what argument(s) it accepts. | |

| result< extent_guard > | lock_file_range (io_request< const_buffers_type > reqs, deadline d=deadline()) noexcept |

| This is an overloaded member function, provided for convenience. It differs from the above function only in what argument(s) it accepts. | |

| template<class... Args> | |

| bool | try_lock_file_range (Args &&... args) noexcept |

| template<class... Args, class Rep , class Period > | |

| bool | try_lock_file_range_for (Args &&... args, const std::chrono::duration< Rep, Period > &duration) noexcept |

| template<class... Args, class Clock , class Duration > | |

| bool | try_lock_file_range_until (Args &&... args, const std::chrono::time_point< Clock, Duration > &timeout) noexcept |

| virtual void | unlock_file_range (extent_type offset, extent_type bytes) noexcept |

| EXTENSION: Unlocks a byte range previously locked. | |

| size_t | max_buffers () const noexcept |

The maximum number of buffers which a single read or write syscall can (atomically) process at a time for this specific open handle. On POSIX, this is known as IOV_MAX. Preferentially uses any i/o multiplexer set over the virtually overridable per-class implementation. | |


| template<class... Args> | |

| bool | try_read (Args &&... args) noexcept |

| template<class... Args, class Rep , class Period > | |

| bool | try_read_for (Args &&... args, const std::chrono::duration< Rep, Period > &duration) noexcept |

| template<class... Args, class Clock , class Duration > | |

| bool | try_read_until (Args &&... args, const std::chrono::time_point< Clock, Duration > &timeout) noexcept |


| template<class... Args> | |

| bool | try_write (Args &&... args) noexcept |

| template<class... Args, class Rep , class Period > | |

| bool | try_write_for (Args &&... args, const std::chrono::duration< Rep, Period > &duration) noexcept |

| template<class... Args, class Clock , class Duration > | |

| bool | try_write_until (Args &&... args, const std::chrono::time_point< Clock, Duration > &timeout) noexcept |

| virtual io_result< const_buffers_type > | barrier (io_request< const_buffers_type > reqs=io_request< const_buffers_type >(), barrier_kind kind=barrier_kind::nowait_data_only, deadline d=deadline()) noexcept |

| Issue a write reordering barrier such that writes preceding the barrier will reach storage before writes after this barrier, preferentially using any i/o multiplexer set over the virtually overridable per-class implementation. | |

| io_result< const_buffers_type > | barrier (barrier_kind kind, deadline d=deadline()) noexcept |

| This is an overloaded member function, provided for convenience. It differs from the above function only in what argument(s) it accepts. | |

| template<class... Args> | |

| bool | try_barrier (Args &&... args) noexcept |

| template<class... Args, class Rep , class Period > | |

| bool | try_barrier_for (Args &&... args, const std::chrono::duration< Rep, Period > &duration) noexcept |

| template<class... Args, class Clock , class Duration > | |

| bool | try_barrier_until (Args &&... args, const std::chrono::time_point< Clock, Duration > &timeout) noexcept |

| QUICKCPPLIB_BITFIELD_BEGIN_T (flag, uint16_t) | |

| Bitwise flags which can be specified. | |

| void | swap (handle &o) noexcept |

| Swap with another instance. | |

| virtual result< path_type > | current_path () const noexcept |

| result< handle > | clone () const noexcept |

| bool | is_valid () const noexcept |

| True if the handle is valid (and usually open) | |

| bool | is_readable () const noexcept |

| True if the handle is readable. | |

| bool | is_writable () const noexcept |

| True if the handle is writable. | |

| bool | is_append_only () const noexcept |

| True if the handle is append only. | |

| virtual result< void > | set_append_only (bool enable) noexcept |

| EXTENSION: Changes whether this handle is append only or not. | |

| bool | is_multiplexable () const noexcept |

| True if multiplexable. | |

| bool | is_nonblocking () const noexcept |

| True if nonblocking. | |

| bool | is_seekable () const noexcept |

| True if seekable. | |

| bool | requires_aligned_io () const noexcept |

| True if requires aligned i/o. | |

| bool | is_kernel_handle () const noexcept |

True if native_handle() is a valid kernel handle. | |

| bool | is_regular () const noexcept |

| True if a regular file or device. | |

| bool | is_directory () const noexcept |

| True if a directory. | |

| bool | is_symlink () const noexcept |

| True if a symlink. | |

| bool | is_pipe () const noexcept |

| True if a pipe. | |

| bool | is_socket () const noexcept |

| True if a socket. | |

| bool | is_multiplexer () const noexcept |

| True if a multiplexer like BSD kqueues, Linux epoll or Windows IOCP. | |

| bool | is_process () const noexcept |

| True if a process. | |

| bool | is_section () const noexcept |

| True if a memory section. | |

| bool | is_allocation () const noexcept |

| True if a memory allocation. | |

| bool | is_path () const noexcept |

| True if a path or a directory. | |

| bool | is_tls_socket () const noexcept |

| True if a TLS socket. | |

| bool | is_http_socket () const noexcept |

| True if an HTTP socket. | |

| caching | kernel_caching () const noexcept |

| Kernel cache strategy used by this handle. | |

| bool | are_reads_from_cache () const noexcept |

| True if the handle uses the kernel page cache for reads. | |

| bool | are_writes_durable () const noexcept |

| True if writes are safely on storage on completion. | |

| bool | are_safety_barriers_issued () const noexcept |

| True if issuing safety fsyncs is on. | |

| flag | flags () const noexcept |

| The flags this handle was opened with. | |

| native_handle_type | native_handle () const noexcept |

| The native handle used by this handle. | |

Static Public Member Functions

| static result< map_handle > | map (size_type bytes, bool zeroed=false, section_handle::flag _flag=section_handle::flag::readwrite) noexcept |

| static result< map_handle > | reserve (size_type bytes) noexcept |

| static result< map_handle > | map (section_handle &section, size_type bytes=0, extent_type offset=0, section_handle::flag _flag=section_handle::flag::readwrite) noexcept |

| static memory_accounting_kind | memory_accounting () noexcept |

| static cache_statistics | trim_cache (std::chrono::steady_clock::time_point older_than={}, size_t max_items=(size_t) -1) noexcept |

| static bool | set_cache_disabled (bool disabled) noexcept |

| static result< span< buffer_type > > | prefetch (span< buffer_type > regions) noexcept |

| static result< buffer_type > | prefetch (buffer_type region) noexcept |

| This is an overloaded member function, provided for convenience. It differs from the above function only in what argument(s) it accepts. | |

Protected Member Functions

| map_handle (section_handle *section, section_handle::flag flags) | |

| virtual size_t | _do_max_buffers () const noexcept override |

The virtualised implementation of max_buffers() used. | |

| virtual io_result< const_buffers_type > | _do_barrier (io_request< const_buffers_type > reqs=io_request< const_buffers_type >(), barrier_kind kind=barrier_kind::nowait_data_only, deadline d=deadline()) noexcept override |

| virtual io_result< buffers_type > | _do_read (io_request< buffers_type > reqs, deadline d=deadline()) noexcept override |

| virtual io_result< const_buffers_type > | _do_write (io_request< const_buffers_type > reqs, deadline d=deadline()) noexcept override |

| bool | _recycle_map () noexcept |

| virtual io_result< buffers_type > | _do_read (io_request< buffers_type > reqs, deadline d) noexcept |

The virtualised implementation of read() used if no multiplexer has been set. | |

| virtual io_result< const_buffers_type > | _do_write (io_request< const_buffers_type > reqs, deadline d) noexcept |

The virtualised implementation of write() used. | |

| virtual io_result< const_buffers_type > | _do_barrier (io_request< const_buffers_type > reqs, barrier_kind kind, deadline d) noexcept |

The virtualised implementation of barrier() used. | |

Static Protected Member Functions

| static result< map_handle > | _new_map (size_type bytes, bool fallback, section_handle::flag _flag) noexcept |

| static result< map_handle > | _recycled_map (size_type bytes, section_handle::flag _flag) noexcept |

Protected Attributes

| section_handle * | _section {nullptr} |

| byte * | _addr {nullptr} |

| extent_type | _offset {0} |

| size_type | _reservation {0} |

| size_type | _length {0} |

| size_type | _pagesize {0} |

| section_handle::flag | _flag {section_handle::flag::none} |

Friends

| class | mapped_file_handle |

Detailed Description

A handle to a memory mapped region of memory, either backed by the system page file or by a section.

An important concept to realise with mapped regions is that they can far exceed the size of their backing storage. This allows one to reserve address space for a file which may grow in the future. This is how mapped_file_handle is implemented to provide very fast memory mapped file i/o of a potentially growing file.

The size you specify when creating the map handle is the address space reservation. The map's length() will return the last known valid length of the mapped data i.e. the backing storage's length at the time of construction. This length is used by read() and write() to prevent reading and writing off the end of the mapped region. You can update this length to the backing storage's length using update_map() up to the reservation limit.
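To make the distinction between reservation and length concrete, here is a hypothetical toy model. It is not LLFIO code: toy_map and its members are illustrative names, and a std::vector stands in for reserved address space. It shows length() lagging behind capacity(), update_map() clamping to the reservation, and reads stopping at length().

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical toy model (not LLFIO): a reservation that may exceed the
// currently valid length of the backing storage.
class toy_map
{
  std::vector<unsigned char> reservation_;  // stands in for reserved address space
  std::size_t length_ = 0;                  // last known valid length of the data

public:
  toy_map(std::size_t reservation, std::size_t backing_length)
      : reservation_(reservation), length_(std::min(backing_length, reservation))
  {
  }

  std::size_t capacity() const { return reservation_.size(); }
  std::size_t length() const { return length_; }

  // Refresh the valid length from the backing storage, clamped to the reservation.
  std::size_t update_map(std::size_t backing_length)
  {
    length_ = std::min(backing_length, capacity());
    return length_;
  }

  // Reads never go past length(), even though capacity() may be larger.
  std::size_t read(std::size_t offset, unsigned char *out, std::size_t bytes) const
  {
    if (offset >= length_)
      return 0;
    const std::size_t n = std::min(bytes, length_ - offset);
    std::memcpy(out, reservation_.data() + offset, n);
    return n;
  }
};
```

For example, a 1 MiB reservation over a 4 KiB file reports a length of 4096 until update_map() is told the file has grown.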

You can attempt to modify the address space reservation after creation using truncate(). If successful, this will be more efficient than tearing down the map and creating a new larger map.

The native handle returned by this map handle is always that of the backing storage, but closing this handle does not close that of the backing storage, nor does releasing this handle release that of the backing storage. Locking byte ranges of this handle is therefore equivalent to locking byte ranges in the original backing storage, which can be very useful.

On Microsoft Windows, when mapping file content, you should try to always create the first map of that file using a writable handle. See mapped_file_handle for more detail on this.

On Linux, be aware that there is a default limit of 65,530 non-contiguous VMAs per process. It is surprisingly easy to run into this limit in real world applications. You can either require users to issue sysctl -w vm.max_map_count=262144 to increase the kernel limit, or take considerable care to never poke holes into large VMAs. do_not_store() is very useful here for releasing the resources backing pages without decommitting them.
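The following Linux-only sketch, which is not part of LLFIO, illustrates what "poking holes" means. It counts VMAs via /proc/self/maps, then compares releasing a page's contents with madvise(MADV_DONTNEED), which leaves the VMA intact, against changing one page's protection with mprotect(), which splits the VMA. The helper names count_vmas and demo_vma_splitting are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <fstream>
#include <string>
#include <sys/mman.h>
#include <unistd.h>

// Counts this process's VMAs (one per line of /proc/self/maps).
// Returns -1 if /proc is not available.
long count_vmas()
{
  std::ifstream maps("/proc/self/maps");
  if (!maps)
    return -1;
  long n = 0;
  for (std::string line; std::getline(maps, line);)
    ++n;
  return n;
}

struct vma_counts
{
  long baseline, after_madvise, after_mprotect;
};

// Maps `pages` read-write anonymous pages, then operates on the middle page only.
vma_counts demo_vma_splitting(std::size_t pages = 8)
{
  const std::size_t ps = static_cast<std::size_t>(sysconf(_SC_PAGESIZE));
  void *p = mmap(nullptr, pages * ps, PROT_READ | PROT_WRITE, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
  if (p == MAP_FAILED)
    return {-1, -1, -1};
  char *middle = static_cast<char *>(p) + (pages / 2) * ps;
  middle[0] = 1;       // give the middle page some contents
  (void) count_vmas(); // warm up so allocations made by the first read do not skew the counts
  vma_counts c{};
  c.baseline = count_vmas();
  // Releasing the page's contents keeps the mapping as a single VMA.
  madvise(middle, ps, MADV_DONTNEED);
  c.after_madvise = count_vmas();
  // Changing one page's protection splits the VMA, typically into three.
  mprotect(middle, ps, PROT_NONE);
  c.after_mprotect = count_vmas();
  munmap(p, pages * ps);
  return c;
}
```

Each split like this consumes extra VMAs, which is how a process creeps toward the vm.max_map_count limit.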

Commit charge:

All virtual memory systems account for memory allocated, even if never used. This is known as "commit charge". No virtual memory system will permit more pages to be committed than there is storage for between RAM and the swap files (except Linux, where most distributions configure "over commit" in the kernel). This ensures that if the system gives you a committed memory page, you are guaranteed that writing into it will not fail. Note that memory mapped files have storage backed by their file contents, so except for pages written into and not yet flushed to storage, memory mapped files usually do not contribute more than a few pages each to commit charge.

- Note

- You can determine the virtual memory accounting model for your system using map_handle::memory_accounting(). This caches the result of interrogating the system, so it is fast after its first call.
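As a rough idea of how such a probe might work on Linux, here is a sketch. It is an assumption that reading /proc/sys/vm/overcommit_memory (0 = heuristic overcommit, 1 = always overcommit, 2 = strict accounting) is a reasonable probe; this is not necessarily how map_handle::memory_accounting() is implemented. The enum mirrors the documented memory_accounting_kind names, and detect_memory_accounting is a hypothetical function. A function-local static gives the "fast after its first call" behaviour.

```cpp
#include <cassert>
#include <fstream>

// Hypothetical mirror of map_handle::memory_accounting_kind.
enum class memory_accounting_kind
{
  unknown,
  commit_charge,
  over_commit
};

// Linux-only sketch: the probe runs once, and the result is cached in a
// function-local static, so later calls do not touch the file system.
memory_accounting_kind detect_memory_accounting()
{
  static const memory_accounting_kind cached = [] {
    std::ifstream f("/proc/sys/vm/overcommit_memory");
    int mode = -1;
    if (!(f >> mode))
      return memory_accounting_kind::unknown;  // not Linux, or /proc not mounted
    switch (mode)
    {
    case 0:  // heuristic overcommit
    case 1:  // always overcommit
      return memory_accounting_kind::over_commit;
    case 2:  // strict commit charge accounting
      return memory_accounting_kind::commit_charge;
    default:
      return memory_accounting_kind::unknown;
    }
  }();
  return cached;
}
```

Other platforms would need their own probe; this sketch simply reports unknown there.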

The system commit limit can be easily exceeded if programs commit a lot of memory that they never use. To avoid this, for large allocations you should reserve pages which you don't expect to use immediately, and later explicitly commit and decommit them. You can request pages not accounted against the system commit charge using flag::nocommit. For portability, you should always combine flag::nocommit with flag::none; indeed, only Linux permits the allocation of usable pages which are not charged against commit. All other platforms enforce that reserved pages must be unusable, and only pages which are committed are usable.
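The reserve-then-commit pattern can be sketched with plain POSIX calls on Linux. This is an analogue for illustration, not LLFIO's implementation, and the helper names are hypothetical: PROT_NONE pages play the role of reserved but unusable pages, mprotect() commits a sub-range, and madvise(MADV_DONTNEED) followed by PROT_NONE decommits it. Exactly when Linux charges such pages against commit depends on the overcommit mode.

```cpp
#include <cassert>
#include <cstddef>
#include <sys/mman.h>
#include <unistd.h>

// Reserve address space only. PROT_NONE pages cannot be touched, and
// MAP_NORESERVE asks Linux not to reserve swap for them.
void *reserve_pages(std::size_t bytes)
{
  void *p = mmap(nullptr, bytes, PROT_NONE, MAP_PRIVATE | MAP_ANONYMOUS | MAP_NORESERVE, -1, 0);
  return p == MAP_FAILED ? nullptr : p;
}

// Commit a page-aligned sub-range by making it readable and writable.
bool commit_pages(void *addr, std::size_t bytes)
{
  return mprotect(addr, bytes, PROT_READ | PROT_WRITE) == 0;
}

// Decommit: discard the contents, then make the range unusable again.
bool decommit_pages(void *addr, std::size_t bytes)
{
  return madvise(addr, bytes, MADV_DONTNEED) == 0 && mprotect(addr, bytes, PROT_NONE) == 0;
}
```

A typical use reserves a large range up front and commits only the pages actually needed as the data structure grows.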

Separate from whether a page is committed is whether it actually consumes resources. Pages never written into are not stored by virtual memory systems, and much code that considers the memory consumption of a process only counts the portion of the total commit charge which contains modified pages. This makes sense given the prevalence of code which commits memory it never uses, but it also leads to anti-social outcomes, such as Linux distributions enabling pathological workarounds like over commit and specialised OOM killers.

Map handle caching

Repeatedly freeing and allocating virtual memory is particularly expensive because page contents must be cleared by the system before they can be handed out again. Most kernels clear pages using an idle loop, but if the system is busy then a surprising amount of CPU time can get consumed wiping pages.

Most users of page allocated memory can tolerate receiving dirty pages, so map_handle implements a process-local cache of previously allocated page regions which have since been close()d. If a new map_handle::map() asks for virtual memory and there is a region in the cache, that region is returned instead of a new region.

Before a region is added to the cache, it is decommitted (except on Linux when overcommit is enabled, see below). It therefore only consumes virtual address space in your process, and does not otherwise consume any resources apart from a VMA entry in the kernel. In particular, it does not appear in your process' RAM consumption (except on Linux). When a region is removed from the cache, it is committed, thus adding it to your process' RAM consumption. During this decommit-recommit process the kernel may choose to scavenge the memory, in which case fresh pages will be restored. However there is a good chance that whatever the pages contained before decommit will still be there after recommit.

Linux has a famously messed up virtual memory implementation. LLFIO implements a strict memory accounting model, and ordinarily we tell Linux which pages are to be counted towards commit charge and which are not, so you don't have to. If overcommit is disabled in the system, you then get the same strict memory accounting as on every other OS.

If however overcommit is enabled, we don't decommit pages, but rather mark them LazyFree. This is to avoid inhibiting VMA coalescing, which is super important on Linux because of its ridiculously low per-process VMA limit, typically 64k regions on most installs. Therefore, if you do disable overcommit, you will also need to substantially raise the maximum per-process VMA limit, as LLFIO will then strictly decommit memory, which prevents VMA coalescing and thus generates many more VMAs.

The process local map handle cache does not self trim over time, so if you wish to reclaim virtual address space you need to manually call map_handle::trim_cache() from time to time.
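A hypothetical model of such a process-local cache follows. It is not LLFIO's implementation: the exact-size matching policy, the region_cache name and its members are assumptions for illustration, and real code would unmap regions when trimming them. It shows closed regions being handed back out to later requests, and a manual trim step, since the cache does not shrink by itself.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <map>

// Hypothetical model of a process-local cache of closed page regions.
class region_cache
{
  using clock_type = std::chrono::steady_clock;
  struct entry
  {
    void *addr;
    clock_type::time_point added;
  };
  std::multimap<std::size_t, entry> regions_;  // keyed by region size in bytes

public:
  // On close(): keep the region for reuse instead of unmapping it.
  void put(void *addr, std::size_t bytes) { regions_.emplace(bytes, entry{addr, clock_type::now()}); }

  // On map(): hand out a cached region of exactly `bytes`, else nullptr
  // (real code would then ask the kernel for a fresh region).
  void *take(std::size_t bytes)
  {
    auto it = regions_.find(bytes);
    if (it == regions_.end())
      return nullptr;
    void *addr = it->second.addr;
    regions_.erase(it);
    return addr;
  }

  // Manual trim: drop up to `max_items` entries added before `older_than`.
  // Real code would unmap each dropped region here. Returns the count dropped.
  std::size_t trim(clock_type::time_point older_than, std::size_t max_items = static_cast<std::size_t>(-1))
  {
    std::size_t dropped = 0;
    for (auto it = regions_.begin(); it != regions_.end() && dropped < max_items;)
    {
      if (it->second.added < older_than)
      {
        it = regions_.erase(it);
        ++dropped;
      }
      else
        ++it;
    }
    return dropped;
  }

  std::size_t size() const { return regions_.size(); }
};
```

Without calls to trim(), every region ever closed keeps occupying address space, which is why periodic trimming is recommended above.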

Barriers:

map_handle, because it implements byte_io_handle, implements barrier() in a very conservative way to account for OS differences i.e. it calls msync(), and then the barrier() implementation for the backing file (probably fsync() or equivalent on most platforms, which synchronises the entire file).
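The conservative barrier described above can be approximated with POSIX calls: msync(MS_SYNC) writes the dirty pages of a shared file mapping back to the file, then fsync() asks for the whole file to reach storage. This is a sketch of the pattern, not LLFIO's code; conservative_barrier and write_via_mapping are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>  // mkstemp, used in the usage example
#include <cstring>
#include <fcntl.h>
#include <sys/mman.h>
#include <unistd.h>

// Conservative barrier: flush the mapped pages, then sync the whole file.
// Returns true only if both calls succeed.
bool conservative_barrier(void *addr, std::size_t bytes, int fd)
{
  if (msync(addr, bytes, MS_SYNC) != 0)
    return false;
  return fsync(fd) == 0;
}

// Sizes the file to fit `text`, writes it through a shared mapping, then barriers.
bool write_via_mapping(int fd, const char *text)
{
  const std::size_t len = std::strlen(text);
  if (len == 0 || ftruncate(fd, static_cast<off_t>(len)) != 0)
    return false;
  void *p = mmap(nullptr, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
  if (p == MAP_FAILED)
    return false;
  std::memcpy(p, text, len);
  const bool ok = conservative_barrier(p, len, fd);
  munmap(p, len);
  return ok;
}
```

Because fsync() covers the entire file, the cost grows with the amount of dirty data in the file, not just the region written.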

This is vast overkill if you are using non-volatile RAM, so a special inlined nvram_barrier() implementation taking a single buffer and no other arguments is also provided as a free function. This calls the appropriate architecture-specific instructions to cause the CPU to write all preceding writes out of the write buffers and CPU caches to main memory, so for Intel CPUs this would be CLWB <each cache line>; SFENCE;. As this is inlined, it ought to produce optimal code. If your CPU does not support the requisite instructions (or LLFIO has not added support), an empty buffer will be returned to indicate that nothing was barriered, same as the normal barrier() function.
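For illustration, here is a sketch of a single-buffer cache line flush on x86-64. It assumes 64-byte cache lines and uses CLFLUSH (baseline SSE2) instead of CLWB, because emitting CLWB requires telling the compiler that the CPU supports it. flush_cache_lines is a hypothetical name, not LLFIO's nvram_barrier(). Like the behaviour described above, it reports that nothing was barriered (returns 0) on architectures it does not support.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#if defined(__x86_64__) || defined(_M_X64)
#include <emmintrin.h>  // _mm_clflush (SSE2)
#include <xmmintrin.h>  // _mm_sfence (SSE)
#endif

// Flushes every cache line overlapping [data, data + bytes) out to memory.
// Returns the number of bytes barriered, or 0 if this sketch does not
// support the current architecture (nothing was barriered).
std::size_t flush_cache_lines(const void *data, std::size_t bytes)
{
#if defined(__x86_64__) || defined(_M_X64)
  constexpr std::uintptr_t line = 64;  // assumed cache line size
  const std::uintptr_t start = reinterpret_cast<std::uintptr_t>(data);
  for (std::uintptr_t p = start & ~(line - 1); p < start + bytes; p += line)
    _mm_clflush(reinterpret_cast<const void *>(p));
  _mm_sfence();  // fence after the flushes, mirroring the flush-then-SFENCE sequence
  return bytes;
#else
  (void) data;
  (void) bytes;
  return 0;
#endif
}
```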

Large page support:

Large, huge, massive and super page support is available via the section_handle::flag::page_sizes_N flags. Use these in combination with utils::page_size() to request allocations or maps which use different page sizes.

Windows:

Firstly, explicit large page support is only available to processes and logged in users who have been assigned the SeLockMemoryPrivilege. A default Windows installation assigns that privilege to nothing, so explicit action will need to be taken to assign that privilege per Windows installation.

Secondly, as of Windows 10 1803, only two page sizes are available: the large page size and the normal page size. There isn't (useful) support for pages of any other size, as there is on other systems.

For allocating memory, large page allocation can randomly fail depending on what the system is feeling like, same as on all the other operating systems. It is not permitted to reserve address space using large pages.

For mapping files, large page maps do not work as of Windows 10 1803 (curiously, ReactOS does implement this). There is a big exception to this, which is for DAX formatted NTFS volumes with a formatted cluster size of the large page size, where if you map in large page sized multiples, the Windows kernel uses large pages (and one need not hold SeLockMemoryPrivilege either). Therefore, if you specify section_handle::flag::nvram with a section_handle::flag::page_sizes_N, LLFIO does not ask for large pages, which would fail; it merely rounds all requests up to the nearest large page multiple.

Linux:

As usual on Linux, large page (often called huge page on Linux) support comes in many forms.

Explicit support is via MAP_HUGETLB to mmap(), and whether an explicit request succeeds or not is up to how many huge pages were configured into the running system via boot-time kernel parameters, and how many huge pages are in use already. For most recent kernels on most distributions, explicit memory allocation requests using large pages generally work without issue. As of Linux kernel 4.18, mapping files using large pages only works on tmpfs; this corresponds to path_discovery::memory_backed_temporary_files_directory() sourced anonymous section handles. Work is proceeding well for the major Linux filing systems to become able to map files using large pages soon, and in theory LLFIO-based code should "just work" on such a newer kernel.

Note that some distributions enable transparent huge pages, whereby if you request allocations of large page multiples at large page offsets, the kernel uses large pages, without you needing to specify any section_handle::flag::page_sizes_N. Almost all distributions enable opt-in transparent huge pages, where you can explicitly request that pages within a region of memory transparently use huge pages as much as possible. LLFIO does not expose such facilities; you will need to manually invoke madvise(MADV_HUGEPAGE) on the region desired.
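A Linux sketch of that manual opt-in: map an anonymous region aligned to the huge page size and call madvise(MADV_HUGEPAGE) on it. The 2 MiB huge page size is an assumption (typical on x86-64), and map_thp_region is a hypothetical helper. madvise() fails with EINVAL if the kernel was built without transparent huge page support, so the result is reported to the caller rather than treated as fatal.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <sys/mman.h>

// Maps `bytes` (a multiple of the assumed 2 MiB huge page size) of anonymous
// memory starting on a huge page boundary, then asks for transparent huge
// pages. *advice_result receives madvise()'s return value (-1 if the
// MADV_HUGEPAGE constant is unavailable). Returns nullptr if mmap() fails.
void *map_thp_region(std::size_t bytes, int *advice_result)
{
  constexpr std::size_t huge = std::size_t(2) << 20;
  assert(bytes != 0 && bytes % huge == 0);
  // Over-allocate by one huge page so an aligned start must exist inside.
  const std::size_t total = bytes + huge;
  void *p = mmap(nullptr, total, PROT_READ | PROT_WRITE, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
  if (p == MAP_FAILED)
    return nullptr;
  const std::uintptr_t raw = reinterpret_cast<std::uintptr_t>(p);
  const std::uintptr_t aligned = (raw + huge - 1) & ~static_cast<std::uintptr_t>(huge - 1);
  // Give back the unaligned head and the unused tail.
  const std::size_t head = aligned - raw;
  const std::size_t tail = total - head - bytes;
  if (head != 0)
    munmap(p, head);
  if (tail != 0)
    munmap(reinterpret_cast<void *>(aligned + bytes), tail);
  void *region = reinterpret_cast<void *>(aligned);
#ifdef MADV_HUGEPAGE
  *advice_result = madvise(region, bytes, MADV_HUGEPAGE);
#else
  *advice_result = -1;
#endif
  return region;
}
```

Even when madvise() succeeds, the kernel decides whether huge pages are actually used; the call is a request, not a guarantee.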

FreeBSD:

FreeBSD has no support for failing if large pages cannot be used for a specific mmap(). The best you can do is to ask for large pages, and you may or may not get them depending on available system resources, filing system in use, etc. LLFIO does not check returned maps to discover if large pages were actually used; it is up to end user code to check if it really needs to know.

MacOS:

MacOS only supports large pages for memory allocations, not for mapping files. It fails if large pages could not be used when a large page allocation was requested.

- See also

mapped_file_handle, algorithm::mapped_span

Member Enumeration Documentation

◆ barrier_kind

[strong], [inherited]

The kinds of write reordering barrier which can be performed.

◆ memory_accounting_kind

[strong]

The kind of memory accounting this system uses.

Constructor & Destructor Documentation

◆ map_handle() [1/4]

[inline], [explicit], [protected]

◆ map_handle() [2/4]

[inline], [constexpr]

Default constructor.

◆ map_handle() [3/4]

[inline], [explicit], [noexcept]

Construct an instance managing pages at addr, length, pagesize and flags

◆ map_handle() [4/4]

[inline], [constexpr], [noexcept]

Implicit move construction of map_handle permitted.

Member Function Documentation

◆ _do_max_buffers()

[inline], [override], [protected], [virtual], [noexcept]

The virtualised implementation of max_buffers() used.

Reimplemented from llfio_v2_xxx::byte_io_handle.

◆ address()

[inline], [noexcept]

The address in memory where this mapped view resides.

◆ are_reads_from_cache()

[inline], [noexcept], [inherited]

True if the handle uses the kernel page cache for reads.

◆ are_safety_barriers_issued()

[inline], [noexcept], [inherited]

True if issuing safety fsyncs is on.

◆ are_writes_durable()

[inline], [noexcept], [inherited]

True if writes are safely on storage on completion.

◆ as_span() [1/2]

[inline], [noexcept]

This is an overloaded member function, provided for convenience. It differs from the above function only in what argument(s) it accepts.

◆ as_span() [2/2]

[inline], [noexcept]

The memory map as a span of bytes.

◆ barrier() [1/2]

[inline], [noexcept], [inherited]

This is an overloaded member function, provided for convenience. It differs from the above function only in what argument(s) it accepts.

◆ barrier() [2/2]

[inline], [virtual], [noexcept], [inherited]

Issue a write reordering barrier such that writes preceding the barrier will reach storage before writes after this barrier, preferentially using any i/o multiplexer set over the virtually overridable per-class implementation.

- Warning

- Assume that this call is a no-op. It is not reliably implemented in many common use cases, for example if your code is running inside an LXC container, or if the user has mounted the filing system with non-default options. Instead, open the handle with caching::reads, which means that all writes form a strict sequential order, not completing until acknowledged by the storage device. Filing systems can and do use different algorithms to give much better performance with caching::reads, some (e.g. ZFS) spectacularly better.

- Let me repeat: consider this call to be a hint to poke the kernel with a stick to go start to do some work sooner rather than later. It may be ignored entirely.

- For portability, you can only assume that barriers write order for a single handle instance. You cannot assume that barriers write order across multiple handles to the same inode, or across processes.

- Returns

- The buffers barriered, which may not be the buffers input. The size of each scatter-gather buffer is updated with the number of bytes of that buffer barriered.

- Parameters

- reqs: A scatter-gather and offset request for what range to barrier. May be ignored on some platforms which always write barrier the entire file. Supplying a default initialised reqs write barriers the entire file.
- kind: Which kind of write reordering barrier to perform.
- d: An optional deadline by which the i/o must complete, else it is cancelled. Note that the function may return significantly after this deadline if the i/o takes a long time to cancel.

- Errors returnable

- Any of the values POSIX fdatasync() or Windows NtFlushBuffersFileEx() can return.

- Memory Allocations

- None.
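The warning above recommends caching::reads instead of relying on barriers. On POSIX our understanding is that caching::reads corresponds to opening with O_SYNC, so each write() returns only once data and metadata have reached storage; treat that mapping as an assumption. The sketch uses the flag directly with plain POSIX calls; open_write_through and write_all are hypothetical helpers, not LLFIO API.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>  // mkstemp, used in the usage example
#include <cstring>
#include <fcntl.h>
#include <sys/types.h>
#include <unistd.h>

// Opens (creating if needed) a file whose writes complete only once data and
// metadata have reached storage, so writes land in a strict sequential order.
int open_write_through(const char *path)
{
  return open(path, O_RDWR | O_CREAT | O_SYNC, 0600);
}

// Writes all of `text` at the current offset; false on error or short write.
bool write_all(int fd, const char *text)
{
  const std::size_t len = std::strlen(text);
  return write(fd, text, len) == static_cast<ssize_t>(len);
}
```

The trade-off is that every write pays the full storage latency, which is exactly why filing systems that optimise this mode can outperform explicit barriers.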

◆ capacity()

[inline], [noexcept]

The reservation size of the memory map.

◆ clone()

[noexcept], [inherited]

Clone this handle (the copy constructor is disabled to avoid accidental copying).

- Errors returnable

- Any of the values POSIX dup() or DuplicateHandle() can return.

◆ close()

[override], [virtual], [noexcept]

Unmap the mapped view.

Reimplemented from llfio_v2_xxx::handle.

◆ commit()

[noexcept]

Ask the system to commit the system resources needed to make the memory represented by the buffer available with the given permissions. addr and length should be page aligned (see page_size()); if they are not, the returned buffer is the region actually committed.
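When a region is not page aligned, the caller may want to predict which pages are affected. The helper below is hypothetical and assumes the committed region is the smallest page-aligned region enclosing the request (start rounded down, end rounded up); check the returned buffer rather than relying on that assumption. region and round_to_pages are illustrative names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

struct region
{
  std::uintptr_t addr;
  std::size_t len;
};

// Expands [addr, addr + len) outward to whole pages. `page` must be a power of two.
region round_to_pages(region r, std::size_t page)
{
  assert(page != 0 && (page & (page - 1)) == 0);
  const std::uintptr_t mask = ~(static_cast<std::uintptr_t>(page) - 1);
  const std::uintptr_t begin = r.addr & mask;                    // round start down
  const std::uintptr_t end = (r.addr + r.len + page - 1) & mask; // round end up
  return {begin, static_cast<std::size_t>(end - begin)};
}
```

The same arithmetic rounds a size up to the nearest large page multiple: a 3 MiB request with 2 MiB large pages becomes 4 MiB.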

◆ current_path()

[virtual], [noexcept], [inherited]

Returns the current path of the open handle as said by the operating system. Note that you are NOT guaranteed that any path refreshed bears any resemblance to the original; some operating systems will return a different path which still reaches the same inode via some other route, e.g. hard links, dereferenced symbolic links, etc. Windows and Linux correctly track changes to the specific path the handle was opened with, not getting confused by other hard links. MacOS nearly gets it right, but under some circumstances, e.g. renaming, it may switch to a different hard link's path, which is almost certainly a bug.

If LLFIO was not able to determine the current path for this open handle e.g. the inode has been unlinked, it returns an empty path. Be aware that FreeBSD can return an empty (deleted) path for file inodes no longer cached by the kernel path cache, LLFIO cannot detect the difference. FreeBSD will also return any path leading to the inode if it is hard linked. FreeBSD does implement path retrieval for directory inodes correctly however, and see algorithm::cached_parent_handle_adapter<T> for a handle adapter which makes use of that.

On Linux if /proc is not mounted, this call fails with an error. All APIs in LLFIO which require the use of current_path() can be told to not use it e.g. flag::disable_safety_unlinks. It is up to you to detect if current_path() is not working, and to change how you call LLFIO appropriately.
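On Linux the usual way to ask the kernel for a descriptor's current path is to readlink() its entry under /proc/self/fd, which is why the call fails without /proc. A minimal sketch assuming Linux (current_path_of() and demo_current_path() are hypothetical, not LLFIO functions); it also shows the path tracking a rename:

```cpp
#include <cassert>
#include <cstdio>    // std::rename
#include <cstdlib>   // mkstemp
#include <string>
#include <unistd.h>

// Sketch: ask the kernel via /proc/self/fd/<n>. Returns an empty string on
// failure (e.g. /proc not mounted). A deleted file comes back with a
// " (deleted)" suffix, which real code must handle.
std::string current_path_of(int fd)
{
  char buf[4096];
  const std::string link = "/proc/self/fd/" + std::to_string(fd);
  const ssize_t n = readlink(link.c_str(), buf, sizeof(buf));
  if (n < 0 || n == static_cast<ssize_t>(sizeof(buf))) return {};  // error or truncated
  return std::string(buf, static_cast<std::size_t>(n));
}

bool demo_current_path()
{
  char path[] = "/tmp/current_path_sketch_XXXXXX";
  int fd = mkstemp(path);
  if (fd < 0) return false;
  bool ok = current_path_of(fd) == path;
  const std::string renamed = std::string(path) + ".renamed";
  const bool renamed_ok = std::rename(path, renamed.c_str()) == 0;
  ok = ok && renamed_ok && current_path_of(fd) == renamed;  // follows the rename
  unlink(renamed_ok ? renamed.c_str() : path);
  close(fd);
  return ok;
}
```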

On Windows, you will almost certainly get back a path of the form \!!\Device\HarddiskVolume10\Users\ned\.... See path_view for what all the path prefix sequences mean, but to summarise the \!!\ prefix is LLFIO-only and will not be accepted by other Windows APIs. Pass LLFIO derived paths through the function to_win32_path() to Win32-ise them. This function is also available on Linux where it does nothing, so you can use it in portable code.

- Warning

- This call is expensive: it always asks the kernel for the current path, and no checking is done to ensure what the kernel returns is accurate or even sensible. Be aware that paths are unstable and can change randomly at any moment. Most code written to use absolute file system paths is racy, so don't do it; use path_handle to fix a base location on the file system and work from that anchor instead!

- Memory Allocations

- At least one malloc for the path_type, likely several more.

- See also

algorithm::cached_parent_handle_adapter<T> which overrides this with an implementation based on retrieving the current path of a cached handle to the parent directory. On platforms with instability or failure to retrieve the correct current path for regular files, the cached parent handle adapter works around the problem by taking advantage of directory inodes not having the same instability problems on any platform.

Reimplemented in llfio_v2_xxx::process_handle.

◆ decommit()

[noexcept]

Ask the system to make the memory represented by the buffer unavailable and to decommit the system resources backing it. addr and length should be page aligned (see page_size()); if they are not, the returned buffer is the region actually decommitted.
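One way to decommit on Linux, sketched below as an assumption rather than as LLFIO's method, is to map a fresh inaccessible mapping over the pages with MAP_FIXED: the old contents and their commit charge go away while the address range stays reserved. decommit_pages() and demo_decommit() are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <sys/mman.h>
#include <unistd.h>

// Sketch: replace page-aligned pages with a fresh PROT_NONE private anonymous
// mapping. MAP_FIXED discards the old pages (and, on Linux, their commit
// charge, since PROT_NONE private mappings are not charged) while keeping the
// address range reserved so nothing else can be mapped there.
bool decommit_pages(void* addr, std::size_t len)
{
  void* p = mmap(addr, len, PROT_NONE, MAP_PRIVATE | MAP_ANONYMOUS | MAP_FIXED, -1, 0);
  return p == addr;
}

bool demo_decommit()
{
  const std::size_t page = sysconf(_SC_PAGESIZE);
  void* r = mmap(nullptr, 2 * page, PROT_READ | PROT_WRITE, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
  if (r == MAP_FAILED) return false;
  auto* bytes = static_cast<unsigned char*>(r);
  bytes[0] = 0xAB;
  bool ok = decommit_pages(r, page);
  // Recommit the page: the old contents are gone, fresh anonymous pages read as zero.
  ok = ok && mprotect(r, page, PROT_READ | PROT_WRITE) == 0 && bytes[0] == 0;
  munmap(r, 2 * page);
  return ok;
}
```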

◆ do_not_store()

[noexcept]

Ask the system to unset the dirty flag for the memory represented by the buffer. This prevents any changes not yet sent to the backing storage from being sent in the future; also, if the system evicts this page and reloads it, you may see some version of the underlying storage instead of what was here. addr and length should be page aligned (see page_size()); if they are not, the returned buffer is the region actually undirtied.

Note that commit charge is not affected by this operation, as writes into the undirtied pages are guaranteed to succeed.

You should be aware that on Microsoft Windows, the platform syscall for discarding virtual memory pages becomes hideously slow when called upon committed pages within a large address space reservation. All three syscalls were trialled, and the least bad is actually DiscardVirtualMemory(), which is what this function uses. However, it still slows down exponentially as more pages within a large reservation become committed, e.g. 8 GB committed within a 2 TB reservation is approximately 20x slower than when < 1 GB is committed. Non-Windows platforms do not have this problem.

- Warning

- This function destroys the contents of unwritten pages in the region in a totally unpredictable fashion. Only use it if you don't care how much of the region reaches physical storage. Note that the region is not necessarily zeroed, and may be randomly zeroed.

- Note

- Microsoft Windows does not support unsetting the dirty flag on file backed maps, so on Windows this call does nothing.
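On Linux the closest primitive for private anonymous memory is madvise(MADV_FREE), available since kernel 4.5. The sketch below assumes that mapping (do_not_store_pages() and demo_do_not_store() are hypothetical) and shows why the contents afterwards are unpredictable:

```cpp
#include <cassert>
#include <cstddef>
#include <sys/mman.h>
#include <unistd.h>

// Sketch for private anonymous memory on Linux: MADV_FREE says the pages'
// contents are no longer needed. Until memory pressure reclaims them they keep
// their old contents; afterwards they read back as zero. Writing to a page
// again cancels the pending free for that page. Returns false if unsupported.
bool do_not_store_pages(void* addr, std::size_t len)  // page aligned
{
#ifdef MADV_FREE
  return madvise(addr, len, MADV_FREE) == 0;
#else
  (void) addr;
  (void) len;
  return false;  // no lazy-free support in these headers: do nothing
#endif
}

// Usage: the byte read back is either the old value or zero, unpredictably.
bool demo_do_not_store()
{
  const std::size_t page = sysconf(_SC_PAGESIZE);
  void* r = mmap(nullptr, page, PROT_READ | PROT_WRITE, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
  if (r == MAP_FAILED) return false;
  auto* b = static_cast<volatile unsigned char*>(r);
  b[0] = 7;
  do_not_store_pages(r, page);
  const unsigned char v = b[0];
  bool ok = (v == 7 || v == 0);
  b[0] = 9;  // the page remains writable
  ok = ok && b[0] == 9;
  munmap(r, page);
  return ok;
}
```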

◆ flags()

[inline], [noexcept], [inherited]

The flags this handle was opened with.

◆ is_allocation()

[inline], [noexcept], [inherited]

True if a memory allocation.

◆ is_append_only()

[inline], [noexcept], [inherited]

True if the handle is append only.

◆ is_directory()

[inline], [noexcept], [inherited]

True if a directory.

◆ is_http_socket()

[inline], [noexcept], [inherited]

True if an HTTP socket.

◆ is_kernel_handle()

[inline], [noexcept], [inherited]

True if native_handle() is a valid kernel handle.

◆ is_multiplexable()

[inline], [noexcept], [inherited]

True if multiplexable.

◆ is_multiplexer()

[inline], [noexcept], [inherited]

True if a multiplexer like BSD kqueues, Linux epoll or Windows IOCP.

◆ is_nonblocking()

[inline], [noexcept], [inherited]

True if nonblocking.

◆ is_nvram()

[inline], [noexcept]

True if the map is of non-volatile RAM.

◆ is_path()

[inline], [noexcept], [inherited]

True if a path or a directory.

◆ is_pipe()

[inline], [noexcept], [inherited]

True if a pipe.

◆ is_process()

[inline], [noexcept], [inherited]

True if a process.

◆ is_readable()

[inline], [noexcept], [inherited]

True if the handle is readable.

◆ is_regular()

[inline], [noexcept], [inherited]

True if a regular file or device.

◆ is_section()

[inline], [noexcept], [inherited]

True if a memory section.

◆ is_seekable()

[inline], [noexcept], [inherited]

True if seekable.

◆ is_socket()

[inline], [noexcept], [inherited]

True if a socket.

◆ is_symlink()

[inline], [noexcept], [inherited]

True if a symlink.

◆ is_tls_socket()

[inline], [noexcept], [inherited]

True if a TLS socket.

◆ is_valid()

[inline], [noexcept], [inherited]

True if the handle is valid (and usually open).

◆ is_writable()

[inline], [noexcept], [inherited]

True if the handle is writable.

◆ kernel_caching()

[inline], [noexcept], [inherited]

Kernel cache strategy used by this handle.

◆ length()

[inline], [noexcept]

The size of the memory map. This is the accessible size, NOT the reservation size.

◆ lock_file()

[virtual], [noexcept], [inherited]

Locks the inode referred to by the open handle for exclusive access.

Note that this may, or may not, interact with the byte range lock extensions. See unique_file_lock for a RAII locker.

- Errors returnable

- Any of the values POSIX flock() can return.

- Memory Allocations

- The default synchronous implementation in file_handle performs no memory allocation.
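Whole-inode locking on POSIX is flock(). A minimal RAII sketch in the spirit of unique_file_lock, using a hypothetical flock_guard class rather than LLFIO's type. Because flock() locks belong to an open file description, two separate open() calls on one file act as two independent lockers even within one process:

```cpp
#include <cassert>
#include <cerrno>
#include <cstdlib>   // mkstemp
#include <fcntl.h>
#include <sys/file.h>
#include <unistd.h>

// Hypothetical RAII guard over POSIX flock() (not LLFIO's unique_file_lock):
// locks the whole inode on construction, unlocks on destruction.
class flock_guard
{
  int _fd{-1};
public:
  flock_guard(int fd, bool exclusive)
  {
    int r;
    do {
      r = flock(fd, exclusive ? LOCK_EX : LOCK_SH);  // blocks until granted
    } while (r != 0 && errno == EINTR);              // retry if interrupted by a signal
    if (r == 0) _fd = fd;
  }
  flock_guard(const flock_guard&) = delete;
  flock_guard& operator=(const flock_guard&) = delete;
  ~flock_guard() { if (_fd >= 0) flock(_fd, LOCK_UN); }
  bool owns_lock() const { return _fd >= 0; }
};

// Usage: two shared locks on the same inode coexist.
bool demo_shared_locks()
{
  char path[] = "/tmp/flock_sketch_XXXXXX";
  int a = mkstemp(path);
  if (a < 0) return false;
  int b = open(path, O_RDWR);  // a second, independent open file description
  bool ok = false;
  if (b >= 0) {
    flock_guard ga(a, false), gb(b, false);
    ok = ga.owns_lock() && gb.owns_lock();
  }  // guards unlock here, before the descriptors are closed
  if (b >= 0) close(b);
  close(a);
  unlink(path);
  return ok;
}
```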

◆ lock_file_range() [1/3]

[virtual], [noexcept], [inherited]

EXTENSION: Tries to lock the range of bytes specified for shared or exclusive access. Note that this may, or MAY NOT, observe whole file locks placed with lock(), lock_shared() etc.

Be aware this passes through the same semantics as the underlying OS call, including any POSIX insanity present on your platform:

- Any fd closed on an inode must release all byte range locks on that inode for all other fds. If your OS isn't new enough to support the non-insane lock API, flag::byte_lock_insanity will be set in flags() after the first call to this function.
- Threads replace each other's locks; indeed, locks replace each other's locks.

You almost certainly should use your choice of an algorithm::shared_fs_mutex::* instead of this, as those are more portable and performant, or use the SharedMutex-modelling member functions which lock the whole inode for exclusive or shared access.

- Warning

- This is a low-level API which you should not use directly in portable code. Another issue is that atomic lock upgrade/downgrade, if your platform implements that (you should assume it does not in portable code), means that on POSIX you need to release the old extent_guard after creating a new one over the same byte range, otherwise the old extent_guard's destructor will simply unlock the range entirely. On Windows, however, upgrade/downgrade locks overlay, so on that platform you must not release the old extent_guard. Look into algorithm::shared_fs_mutex::safe_byte_ranges for a portable solution.

- Returns

- An extent guard, the destruction of which will call unlock().

- Parameters

- offset: The offset to lock. Note that on POSIX the top bit is always cleared before use, as POSIX uses signed transport for offsets. If you want an advisory rather than mandatory lock on Windows, one technique is to force the top bit set so the region you lock is not the one you will i/o; obviously this reduces the maximum file size to (2^63)-1.
- bytes: The number of bytes to lock. Setting this and the offset to zero causes the whole file to be locked.
- kind: Whether the lock is to be shared or exclusive.
- d: An optional deadline by which the lock must complete, else it is cancelled.

- Errors returnable

- Any of the values POSIX fcntl() can return, or errc::timed_out. errc::not_supported may be returned if deadline i/o is not possible with this particular handle configuration (e.g. a non-overlapped HANDLE on Windows).

- Memory Allocations

- The default synchronous implementation in file_handle performs no memory allocation.

Reimplemented in llfio_v2_xxx::fast_random_file_handle.
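To illustrate the POSIX semantics described above, here is a sketch using Linux open file description locks (F_OFD_SETLK, Linux 3.15+), which avoid the close-releases-everything insanity of classic fcntl() locks. try_lock_range() and demo_range_locks() are hypothetical helpers, and this sketch makes no claim about whether LLFIO uses OFD locks on a given system:

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>   // mkstemp
#include <fcntl.h>
#include <unistd.h>

// Non-blocking byte range lock. OFD locks belong to the open file description,
// so closing some other fd on the inode does not drop them. Falls back to
// classic per-process F_SETLK if the headers lack F_OFD_SETLK.
// Returns false if a conflicting lock is held (or on error).
bool try_lock_range(int fd, std::uint64_t offset, std::uint64_t bytes, bool exclusive)
{
  struct flock fl{};
  fl.l_type = exclusive ? F_WRLCK : F_RDLCK;
  fl.l_whence = SEEK_SET;
  fl.l_start = static_cast<off_t>(offset & ~(std::uint64_t(1) << 63));  // POSIX offsets are signed
  fl.l_len = static_cast<off_t>(bytes);  // 0 means "to end of file"
  fl.l_pid = 0;                          // must be zero for OFD locks
#ifdef F_OFD_SETLK
  return fcntl(fd, F_OFD_SETLK, &fl) == 0;
#else
  return fcntl(fd, F_SETLK, &fl) == 0;
#endif
}

// Usage: with OFD locks, two descriptors in one process conflict as expected.
// (Classic POSIX locks held by the same process would not conflict.)
bool demo_range_locks()
{
  char path[] = "/tmp/range_lock_sketch_XXXXXX";
  int a = mkstemp(path);
  if (a < 0) return false;
  int b = open(path, O_RDWR);
  bool ok = b >= 0
    && try_lock_range(a, 0, 100, true)      // a holds bytes [0, 100) exclusively
    && !try_lock_range(b, 50, 100, true)    // an overlapping request via b is refused
    && try_lock_range(b, 100, 100, true);   // a disjoint range is fine
  if (b >= 0) close(b);
  close(a);
  unlink(path);
  return ok;
}
```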

◆ lock_file_range() [2/3]

[inline], [noexcept], [inherited]

This is an overloaded member function, provided for convenience. It differs from the above function only in what argument(s) it accepts.

◆ lock_file_range() [3/3]

[inline], [noexcept], [inherited]

This is an overloaded member function, provided for convenience. It differs from the above function only in what argument(s) it accepts.

◆ lock_file_shared()

[virtual], [noexcept], [inherited]

Locks the inode referred to by the open handle for shared access.

Note that this may, or may not, interact with the byte range lock extensions. See unique_file_lock for a RAII locker.

- Errors returnable

- Any of the values POSIX flock() can return.

- Memory Allocations

- The default synchronous implementation in file_handle performs no memory allocation.

◆ map() [1/2]

[static], [noexcept]

Create a memory mapped view of a backing storage, optionally reserving additional address space for later growth.

- Parameters

- section: A memory section handle specifying the backing storage to use.
- bytes: How many bytes to reserve (0 = the size of the section). Rounded up to the nearest 64 KB on Windows.
- offset: The offset into the backing storage to map from. This can be byte granularity, but be careful if you use non-pagesize offsets (see below).
- _flag: The permissions with which to map the view, which are constrained by the permissions of the memory section. flag::none can be useful for reserving virtual address space without committing system resources; use commit() to later change the availability of memory. Note that apart from read/write/cow/execute, the section's flags override the map's flags.

- Errors returnable

- Any of the values POSIX mmap() or NtMapViewOfSection() can return.
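mmap() itself only accepts page-aligned file offsets, which is why byte-granular offsets need care. A sketch of the usual workaround on POSIX, assuming a shared file mapping (mapped_view, map_at_offset() and demo_map_at_offset() are hypothetical):

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>   // mkstemp
#include <cstring>   // std::memcmp
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

// Map from the page boundary below the requested offset and hand back a
// pointer advanced into that page. The caller must munmap(base, base_len).
struct mapped_view
{
  void* base = nullptr;      // what mmap() returned (page aligned)
  std::size_t base_len = 0;  // length actually mapped
  char* data = nullptr;      // points at the requested offset
};

mapped_view map_at_offset(int fd, off_t offset, std::size_t len, int prot)
{
  const off_t page = sysconf(_SC_PAGESIZE);
  const off_t aligned = offset - (offset % page);
  const std::size_t slack = static_cast<std::size_t>(offset - aligned);
  mapped_view v;
  void* p = mmap(nullptr, len + slack, prot, MAP_SHARED, fd, aligned);
  if (p == MAP_FAILED) return v;
  v.base = p;
  v.base_len = len + slack;
  v.data = static_cast<char*>(p) + slack;
  return v;
}

bool demo_map_at_offset()
{
  char path[] = "/tmp/map_offset_sketch_XXXXXX";
  int fd = mkstemp(path);
  if (fd < 0) return false;
  unlink(path);
  const long page = sysconf(_SC_PAGESIZE);
  bool ok = ftruncate(fd, 2 * page) == 0 && pwrite(fd, "abc", 3, page + 5) == 3;
  if (ok) {
    mapped_view v = map_at_offset(fd, page + 5, 3, PROT_READ);
    ok = v.data != nullptr && std::memcmp(v.data, "abc", 3) == 0;
    if (v.base) munmap(v.base, v.base_len);
  }
  close(fd);
  return ok;
}
```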

◆ map() [2/2]

[inline], [static], [noexcept]

Map unused memory into view, creating new memory if insufficient unused memory is available (i.e. add the returned memory to the process' commit charge, unless flag::nocommit was specified). Note that the memory mapped by this call may contain non-zero bits (recycled memory) unless zeroed is true.

- Parameters

- bytes: How many bytes to map. Typically rounded up to a multiple of the page size (see page_size()).
- zeroed: Set to true if only all-bits-zero memory is wanted. If true, a syscall is always performed, as the kernel probably has zeroed pages ready to go, whereas if false, the request may be satisfied from a local cache instead. The default is false.
- _flag: The permissions with which to map the view.

- Note

- On Microsoft Windows this function uses the faster VirtualAlloc(), which creates less versatile page-backed memory. If you want anonymous memory allocated from a paging-file-backed section instead, create a page-file-backed section and then a mapped view from that using the section-based map() overload. This makes available all those very useful VM tricks Windows can do with section-mapped memory which VirtualAlloc() memory cannot do.

When this kind of map handle is closed, it is added to an internal cache so new map handle creations of this kind with zeroed = false are very quick and avoid a syscall. The internal cache may return a map slightly bigger than requested. If you wish to always invoke the syscall, specify zeroed = true.

When maps are added to the internal cache, on all systems except Linux the memory is decommitted first. This reduces commit charge appropriately, thus only virtual address space remains consumed. On Linux, if memory_accounting() is memory_accounting_kind::commit_charge, we also decommit, however be aware that this can increase the average VMA use count in the Linux kernel, and most Linux kernels are configured with a very low per-process limit of 64k VMAs (this is easy to raise using sysctl -w vm.max_map_count=262144). Otherwise on Linux to avoid increasing VMA count we instead mark closed maps as LazyFree, which means that their contents can be arbitrarily disposed of by the Linux kernel as needed, but also allows Linux to coalesce VMAs so the very low per-process limit is less likely to be exceeded. If the LazyFree syscall is not implemented on this Linux, we do nothing.

- Warning

- The cache does not self-trim; you MUST call trim_cache() to trim allocations of virtual address space (these don't count towards process commit charge, but they do consume address space and precious VMAs in the Linux kernel). Only in 32-bit processes, where virtual address space is limited, or on Linux, where the number of VMAs allocated is considered by the Linux OOM killer, are you likely to need to care much about regular cache trimming.

- Errors returnable

- Any of the values POSIX mmap() or VirtualAlloc() can return.
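The cache behaviour above can be modelled with a toy, single-threaded cache of anonymous mappings. anon_map_cache is hypothetical and much simpler than LLFIO's cache (it does not decommit or lazy-free cached maps), but it shows reuse when zeroed = false, a returned map possibly bigger than requested, a fresh mapping when zeroed = true, and explicit trimming:

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <sys/mman.h>
#include <unistd.h>

// Toy model: released anonymous maps are kept for reuse instead of being
// returned to the kernel. Nothing is trimmed unless trim() is called.
class anon_map_cache
{
  std::multimap<std::size_t, void*> _free;  // length -> cached region
  static std::size_t round_to_page(std::size_t n)
  {
    const std::size_t page = sysconf(_SC_PAGESIZE);
    return (n + page - 1) / page * page;
  }
public:
  struct region { void* addr; std::size_t len; };

  region map(std::size_t bytes, bool zeroed)
  {
    const std::size_t len = round_to_page(bytes);
    if (!zeroed) {
      // Reuse the smallest cached region that is big enough; it may be larger
      // than requested and may still contain old bytes.
      auto it = _free.lower_bound(len);
      if (it != _free.end()) {
        region r{it->second, it->first};
        _free.erase(it);
        return r;
      }
    }
    // zeroed == true, or nothing suitable cached: the kernel hands out zero pages.
    void* p = mmap(nullptr, len, PROT_READ | PROT_WRITE, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    return p == MAP_FAILED ? region{nullptr, 0} : region{p, len};
  }

  void close(region r) { if (r.addr) _free.emplace(r.len, r.addr); }

  // Unmaps every cached region, returning how many there were.
  std::size_t trim()
  {
    const std::size_t n = _free.size();
    for (auto& [len, addr] : _free) munmap(addr, len);
    _free.clear();
    return n;
  }
};

bool demo_cache()
{
  anon_map_cache cache;
  auto a = cache.map(100, false);
  if (!a.addr) return false;
  static_cast<unsigned char*>(a.addr)[0] = 0x5A;
  cache.close(a);
  auto b = cache.map(50, false);  // served from the cache: same region, old bytes
  bool ok = b.addr == a.addr && static_cast<unsigned char*>(b.addr)[0] == 0x5A;
  cache.close(b);
  auto c = cache.map(50, true);   // zeroed: always a fresh mapping
  ok = ok && c.addr && c.addr != a.addr && static_cast<unsigned char*>(c.addr)[0] == 0;
  if (c.addr) munmap(c.addr, c.len);
  return cache.trim() == 1 && ok;
}
```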

◆ max_buffers()

[inline], [noexcept], [inherited]

The maximum number of buffers which a single read or write syscall can (atomically) process at a time for this specific open handle. On POSIX, this is known as IOV_MAX. Preferentially uses any i/o multiplexer set over the virtually overridable per-class implementation.

Note that the actual number of buffers accepted for a read or a write may be significantly lower than this system-defined limit, depending on available resources. The read() or write() call will return the buffers accepted at the time of invoking the syscall.

Note also that some OSs will error out if you supply more than this limit to read() or write(), but other OSs do not. Some OSs guarantee that each i/o syscall has effects atomically visible or not to other i/o, other OSs do not.

OS X does not implement scatter-gather file i/o syscalls. Thus this function will always return 1 in that situation.

Microsoft Windows may implement scatter-gather i/o under certain handle configurations. Most of the time for non-socket handles this function will return 1.

For handles which implement i/o entirely in user space, and thus syscalls are not involved, this function will return 0.
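On POSIX, the limit can be queried with sysconf(_SC_IOV_MAX). A sketch of splitting a scatter-gather list into syscall-sized batches (iov_max(), write_batched() and demo_writev() are hypothetical helpers):

```cpp
#include <cassert>
#include <algorithm>
#include <cstddef>
#include <string>
#include <vector>
#include <sys/uio.h>
#include <unistd.h>

// POSIX guarantees at least 16 buffers per call (_XOPEN_IOV_MAX); sysconf()
// may report -1 if the limit is indeterminate.
long iov_max()
{
  const long n = sysconf(_SC_IOV_MAX);
  return n > 0 ? n : 16;
}

// Submit buffers in chunks of at most iov_max(). A writev() may accept fewer
// bytes than offered, so stop at the first short write and report the total;
// the caller would resume from there.
long long write_batched(int fd, const std::vector<iovec>& bufs)
{
  long long total = 0;
  const std::size_t limit = static_cast<std::size_t>(iov_max());
  for (std::size_t i = 0; i < bufs.size(); i += limit) {
    const std::size_t count = std::min(limit, bufs.size() - i);
    std::size_t want = 0;
    for (std::size_t k = 0; k < count; ++k) want += bufs[i + k].iov_len;
    const ssize_t n = writev(fd, bufs.data() + i, static_cast<int>(count));
    if (n < 0) return -1;
    total += n;
    if (static_cast<std::size_t>(n) < want) break;  // short write
  }
  return total;
}

bool demo_writev()
{
  int fds[2];
  if (pipe(fds) != 0) return false;
  char a[] = "ab", b[] = "cd", c[] = "ef";
  std::vector<iovec> bufs{{a, 2}, {b, 2}, {c, 2}};
  bool ok = write_batched(fds[1], bufs) == 6;
  char out[7] = {};
  ok = ok && read(fds[0], out, 6) == 6 && std::string(out) == "abcdef";
  close(fds[0]);
  close(fds[1]);
  return ok;
}
```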

◆ memory_accounting()

[inline], [static], [noexcept]

◆ native_handle()

[inline], [noexcept], [inherited]

The native handle used by this handle.

◆ offset()

[inline], [noexcept]

The offset of the memory map.

◆ operator=()

[inline], [noexcept]

Move assignment of map_handle permitted.

◆ page_size()

[inline], [noexcept]

The page size used by the map, in bytes.
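For reference, the base page size on POSIX comes from sysconf(_SC_PAGESIZE), and rounding to it is a simple mask because page sizes are powers of two. system_page_size() and round_up_to_page() are hypothetical helpers:

```cpp
#include <cassert>
#include <cstddef>
#include <unistd.h>

// The system's base page size on POSIX.
std::size_t system_page_size()
{
  return static_cast<std::size_t>(sysconf(_SC_PAGESIZE));
}

// Round n up to a multiple of pagesize, which must be a power of two.
std::size_t round_up_to_page(std::size_t n, std::size_t pagesize)
{
  return (n + pagesize - 1) & ~(pagesize - 1);
}
```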

◆ prefetch() [1/2]

[inline], [static], [noexcept]

This is an overloaded member function, provided for convenience. It differs from the above function only in what argument(s) it accepts.

◆ prefetch() [2/2]

[static], [noexcept]

Ask the system to begin to asynchronously prefetch the span of memory regions given, returning the regions actually prefetched. Note that on Windows 7 or earlier the system call to implement this was not available, and so you will see an empty span returned.
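On POSIX, an asynchronous prefetch hint is madvise(MADV_WILLNEED). A sketch that rounds each region out to whole pages and reports only the regions the kernel accepted (mem_region, prefetch_regions() and demo_prefetch() are hypothetical):

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>
#include <sys/mman.h>
#include <unistd.h>

struct mem_region { void* addr; std::size_t len; };

// Widen each region to whole pages and hint MADV_WILLNEED, asking the kernel
// to start bringing the pages in asynchronously. Rejected regions are omitted.
std::vector<mem_region> prefetch_regions(const std::vector<mem_region>& regions)
{
  const auto page = static_cast<std::uintptr_t>(sysconf(_SC_PAGESIZE));
  std::vector<mem_region> done;
  for (const auto& r : regions) {
    const auto begin = reinterpret_cast<std::uintptr_t>(r.addr) & ~(page - 1);
    const auto end = (reinterpret_cast<std::uintptr_t>(r.addr) + r.len + page - 1) & ~(page - 1);
    void* p = reinterpret_cast<void*>(begin);
    if (end > begin && madvise(p, end - begin, MADV_WILLNEED) == 0)
      done.push_back({p, end - begin});
  }
  return done;
}

bool demo_prefetch()
{
  const std::size_t page = sysconf(_SC_PAGESIZE);
  void* r = mmap(nullptr, 4 * page, PROT_READ | PROT_WRITE, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
  if (r == MAP_FAILED) return false;
  auto* base = static_cast<char*>(r);
  std::vector<mem_region> req{mem_region{base + 10, 100}};
  auto done = prefetch_regions(req);
  // Either the hint was rejected, or exactly the first page was prefetched.
  bool ok = done.empty() || (done.size() == 1 && done[0].addr == base && done[0].len == page);
  munmap(r, 4 * page);
  return ok;
}
```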

◆ QUICKCPPLIB_BITFIELD_BEGIN_T()

[inline], [inherited]

Bitwise flags which can be specified.

No flags.

Unlinks the file on handle close. On POSIX, this simply unlinks whatever is pointed to by path() upon the call of close() if and only if the inode matches. On Windows 10 1709 or later, exactly the same thing occurs. On earlier editions of Windows, the file entry does not disappear but becomes unavailable for anyone else to open, with an errc::resource_unavailable_try_again error return. Because this is confusing, unless the win_disable_unlink_emulation flag is also specified, LLFIO somewhat emulates this POSIX behaviour on older Windows by renaming the file to a random name on close(), causing it to appear to have been unlinked immediately.

Some kernel caching modes have unhelpfully inconsistent behaviours in getting your data onto storage, so by default, unless this flag is specified, LLFIO adds extra fsyncs to the following operations for the caching modes specified below:

- truncation of file length, either explicitly or during file open;
- closing of the handle, either explicitly or in the destructor.

Additionally, on Linux only, to prevent loss of file metadata:

- on the parent directory whenever a file might have been created;
- on the parent directory on file close.

This only occurs for these kernel caching modes:

- caching::none
- caching::reads
- caching::reads_and_metadata
- caching::safety_barriers

file_handle::unlink() could accidentally delete the wrong file if someone has renamed the open file handle since the time it was opened. To prevent this occurring, where the OS doesn't provide race-free unlink-by-open-handle, we compare the inode of the path we are about to unlink with that of the open handle before unlinking.

- Warning

- This does not prevent races where in between the time of checking the inode and executing the unlink a third party changes the item about to be unlinked. Only operating systems with a true race-free unlink syscall are race free.
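The inode comparison performed before unlinking can be sketched with POSIX fstat() and lstat(). unlink_if_same_inode() and demo_safe_unlink() are hypothetical, and as the warning says, the check narrows rather than eliminates the race:

```cpp
#include <cassert>
#include <cstdio>    // std::rename, std::fopen
#include <cstdlib>   // mkstemp
#include <string>
#include <sys/stat.h>
#include <unistd.h>

// Only unlink `path` if it still names the same inode (device + inode number)
// as the open descriptor. A third party can still swap the entry between the
// lstat() and the unlink().
bool unlink_if_same_inode(int fd, const char* path)
{
  struct stat by_fd{}, by_path{};
  if (fstat(fd, &by_fd) != 0 || lstat(path, &by_path) != 0) return false;
  if (by_fd.st_dev != by_path.st_dev || by_fd.st_ino != by_path.st_ino) return false;
  return unlink(path) == 0;
}

bool demo_safe_unlink()
{
  char path[] = "/tmp/safe_unlink_sketch_XXXXXX";
  int fd = mkstemp(path);
  if (fd < 0) return false;
  const std::string moved = std::string(path) + ".moved";
  bool ok = std::rename(path, moved.c_str()) == 0;  // someone renames our file...
  std::FILE* other = std::fopen(path, "w");        // ...and a different file takes its name
  ok = ok && other != nullptr;
  if (other) std::fclose(other);
  ok = ok && !unlink_if_same_inode(fd, path)           // refused: wrong inode
          && unlink_if_same_inode(fd, moved.c_str());  // our inode, under its new name
  unlink(path);
  unlink(moved.c_str());
  close(fd);
  return ok;
}
```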

Ask the OS to disable prefetching of data. This can improve random i/o performance.

Ask the OS to maximise prefetching of data, possibly prefetching the entire file into kernel cache. This can improve sequential i/o performance.

See the documentation for unlink_on_first_close.

Microsoft Windows NTFS, having been created in the late 1980s, did not originally implement extents-based storage and thus could only represent sparse files via efficient compression of intermediate zeros. With NTFS v3.0 (Microsoft Windows 2000), a proper extents-based on-storage representation was added, allowing only the 64 KB extent chunks actually written to be stored, irrespective of whatever the maximum file extent was set to.

For various historical reasons, extents-based storage is disabled by default in newly created files on NTFS, unlike in almost every other major filing system. You have to explicitly "opt in" to extents-based storage.

As extents-based storage is nearly cost free on NTFS, LLFIO by default opts in to extents-based storage for any empty file it creates. If you don't want this, you can specify this flag to prevent that happening.

Filesystems tend to be embarrassingly parallel for operations performed to different inodes. Where LLFIO performs i/o to multiple inodes at a time, it will use OpenMP or the Parallelism or Concurrency standard library extensions to usually complete the operation in constant rather than linear time. If you don't want this default, you can disable it using this flag.

Microsoft Windows NTFS has the option, when creating a directory, to set whether leafname lookup will be case sensitive. This is the only way of getting exact POSIX semantics on Windows without resorting to editing the system registry, however it also affects all code doing lookups within that directory, so we must default it to off.

Create the handle in a way where i/o upon it can be multiplexed with other i/o on the same initiating thread of execution i.e. you can perform more than one read concurrently, without using threads. The blocking operations .read() and .write() may have to use a less efficient, but cancellable, blocking implementation for handles created in this way. On Microsoft Windows, this creates handles with OVERLAPPED semantics. On POSIX, this creates handles with nonblocking semantics for non-file handles such as pipes and sockets, however for file, directory and symlink handles it does not set nonblocking, as it is non-portable.

Using insane POSIX byte range locks.

This is an inode created with no representation on the filing system.

◆ read() [1/3]

[inline], [noexcept]

This is an overloaded member function, provided for convenience. It differs from the above function only in what argument(s) it accepts.

◆ read() [2/3]

[inline], [noexcept], [inherited]

Read data from the open handle, preferentially using any i/o multiplexer set over the virtually overridable per-class implementation.

- Warning

- Depending on the implementation backend, the buffers returned may be very different from those you supplied. You should always use the buffers returned and assume that they point to different memory and that each buffer's size will have changed.

- Returns

- The buffers read, which may not be the buffers input. The size of each scatter-gather buffer returned is updated with the number of bytes of that buffer transferred, and the pointer to the data may be completely different to what was submitted (e.g. it may point into a memory map).

- Parameters

- reqs: A scatter-gather and offset request.
- d: An optional deadline by which the i/o must complete, else it is cancelled. Note that the function may return significantly after this deadline if the i/o takes a long time to cancel.

- Errors returnable

- Any of the values POSIX read() can return, errc::timed_out, or errc::operation_canceled. errc::not_supported may be returned if deadline i/o is not possible with this particular handle configuration (e.g. reading from regular files on POSIX or reading from a non-overlapped HANDLE on Windows).

- Memory Allocations

- The default synchronous implementation in file_handle performs no memory allocation.
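A hypothetical illustration of why the returned buffers must be used: a memory-map-backed read can return a buffer pointing into the mapped view instead of copying into yours. buffer and read_from_view() are invented for this sketch and are not LLFIO's types:

```cpp
#include <cassert>
#include <algorithm>
#include <cstddef>

struct buffer { std::byte* data; std::size_t size; };

// "Read" from a mapped view without copying: return a buffer that points
// straight into the view, clamped to the bytes available. The caller's own
// buffer is left untouched.
buffer read_from_view(std::byte* view, std::size_t view_len, std::size_t offset, buffer requested)
{
  if (offset >= view_len) return {requested.data, 0};  // nothing transferred
  const std::size_t n = std::min(requested.size, view_len - offset);
  return {view + offset, n};  // different pointer, possibly smaller size
}
```

Code using this must read from r.data for r.size bytes, never from its own destination buffer.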

◆ read() [3/3]

[inline], [noexcept]

Read data from the open handle, preferentially using any i/o multiplexer set over the virtually overridable per-class implementation.

- Warning

- Depending on the implementation backend, the buffers returned may be very different from those you supplied. You should always use the buffers returned and assume that they point to different memory and that each buffer's size will have changed.

- Returns

- The buffers read, which may not be the buffers input. The size of each scatter-gather buffer returned is updated with the number of bytes of that buffer transferred, and the pointer to the data may be completely different to what was submitted (e.g. it may point into a memory map).

- Parameters

- reqs: A scatter-gather and offset request.
- d: An optional deadline by which the i/o must complete, else it is cancelled. Note that the function may return significantly after this deadline if the i/o takes a long time to cancel.

- Errors returnable

- Any of the values POSIX read() can return, errc::timed_out, or errc::operation_canceled. errc::not_supported may be returned if deadline i/o is not possible with this particular handle configuration (e.g. reading from regular files on POSIX or reading from a non-overlapped HANDLE on Windows).

- Memory Allocations

- The default synchronous implementation in file_handle performs no memory allocation.

◆ release()

[override], [virtual], [noexcept]

Releases the mapped view, but does NOT release the native handle.

Reimplemented from llfio_v2_xxx::handle.

◆ requires_aligned_io()

[inline], [noexcept], [inherited]

True if requires aligned i/o.

◆ reserve()

[inline], [static], [noexcept]

Reserve address space within which individual pages can later be committed. Reserved address space is NOT added to the process' commit charge.

- Parameters

- bytes: How many bytes to reserve. Rounded up to the nearest 64 KB on Windows.

- Note

- On Microsoft Windows this function uses the faster VirtualAlloc(), which creates less versatile page-backed memory. If you want anonymous memory allocated from a paging-file-backed section instead, create a page-file-backed section and then a mapped view from that using the section-based map() overload. This makes available all those very useful VM tricks Windows can do with section-mapped memory which VirtualAlloc() memory cannot do.

- Errors returnable

- Any of the values POSIX mmap() or VirtualAlloc() can return.
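On POSIX, a reservation is typically an inaccessible anonymous mapping. A sketch assuming Linux accounting rules (reserve_address_space() is hypothetical, not LLFIO's implementation):

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <sys/mman.h>
#include <unistd.h>

// A PROT_NONE private anonymous mapping claims address space only; Linux does
// not charge such mappings to the commit charge. Pages become usable (and
// chargeable) later when made accessible with mprotect(), i.e. commit().
// Returns nullptr on failure; `reserved` receives the page-rounded length.
void* reserve_address_space(std::size_t bytes, std::size_t& reserved)
{
  const std::size_t page = static_cast<std::size_t>(sysconf(_SC_PAGESIZE));
  reserved = (bytes + page - 1) / page * page;  // round up to whole pages
  void* p = mmap(nullptr, reserved, PROT_NONE, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
  return p == MAP_FAILED ? nullptr : p;
}
```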

◆ section()

[inline], [noexcept]

The memory section this handle is using.

◆ set_append_only()

[virtual], [noexcept], [inherited]

EXTENSION: Changes whether this handle is append only or not.

- Warning

- On Windows this is implemented as a bit of a hack to make it fast like on POSIX, so make sure you open the handle for read/write originally. Note that, unlike on POSIX, the append_only disposition will be the only one toggled; seekable and readable will remain turned on.

- Errors returnable

- Whatever POSIX fcntl() returns. On Windows nothing is changed on the handle.

- Memory Allocations

- No memory allocation.

Reimplemented in llfio_v2_xxx::process_handle.
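On POSIX, toggling append-only mode is an fcntl() F_GETFL/F_SETFL round trip on O_APPEND. A sketch (set_append_only_fd() and demo_append_only() are hypothetical):

```cpp
#include <cassert>
#include <cstdlib>   // mkstemp
#include <string>
#include <fcntl.h>
#include <unistd.h>

// Toggle O_APPEND on an open descriptor. Returns true on success.
bool set_append_only_fd(int fd, bool enable)
{
  int fl = fcntl(fd, F_GETFL);
  if (fl < 0) return false;
  fl = enable ? (fl | O_APPEND) : (fl & ~O_APPEND);
  return fcntl(fd, F_SETFL, fl) == 0;
}

bool demo_append_only()
{
  char path[] = "/tmp/append_sketch_XXXXXX";
  int fd = mkstemp(path);
  if (fd < 0) return false;
  unlink(path);
  char buf[4] = {};
  bool ok = write(fd, "ab", 2) == 2 && set_append_only_fd(fd, true)
    && lseek(fd, 0, SEEK_SET) == 0 && write(fd, "c", 1) == 1  // appended despite the seek
    && set_append_only_fd(fd, false)
    && lseek(fd, 0, SEEK_SET) == 0 && write(fd, "X", 1) == 1  // overwrites byte 0
    && pread(fd, buf, 3, 0) == 3 && std::string(buf) == "Xbc";
  close(fd);
  return ok;
}
```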

◆ set_cache_disabled()

[static], [noexcept]

Disable the map handle cache, returning its previous setting. Note that you may also wish to explicitly trim the cache.

◆ set_section()

[inline], [noexcept]

Sets the memory section this handle is using.

◆ swap() [1/2]

[inline], [noexcept], [inherited]

Swap with another instance.

◆ swap() [2/2]

[inline], [noexcept]

Swap with another instance.

◆ trim_cache()

[static], [noexcept]

Get statistics about the map handle cache, optionally trimming the least recently used maps.

◆ truncate()

[noexcept]

Resize the reservation of the memory map without changing the address (unless the map was zero sized, in which case a new address will be chosen).

If shrinking, address space is released on POSIX, and on Windows if the new size is zero. If the new size is zero, the address is set to null to prevent surprises. Windows does not support modifying existing mapped regions, so if the new size is not zero, the call will probably fail. Windows should let you truncate a previous extension however, if it is exact.

If expanding, an attempt is made to map in new reservation immediately after the current address reservation, thus extending the reservation. If anything else is mapped in after the current reservation, the function fails.

- Note

- On all supported platforms apart from OS X, proprietary flags exist to avoid performing a map if a map extension cannot be immediately placed after the current map. On OS X, we hint where we'd like the new map to go, but if something is already there OS X will place the map elsewhere. In this situation, we delete the new map and return failure, which is inefficient, but there is nothing else we can do.

- Returns

- The bytes actually reserved.

- Parameters

- newsize: The bytes to truncate the map reservation to. Rounded up to the nearest page size (POSIX) or 64 KB (Windows).
- permit_relocation: Permit the address to change (some OSs provide a syscall for resizing a memory map).

- Errors returnable

- Any of the values POSIX mremap(), mmap(addr) or VirtualAlloc(addr) can return.
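On Linux, the non-relocating growth described above corresponds to mremap() without MREMAP_MAYMOVE, which either resizes in place or fails. A Linux-only sketch (resize_mapping() and demo_resize() are hypothetical; mremap() needs _GNU_SOURCE, which g++ defines by default):

```cpp
#include <cassert>
#include <cstddef>
#include <sys/mman.h>
#include <unistd.h>

// Without MREMAP_MAYMOVE the kernel grows/shrinks the mapping in place or
// fails with ENOMEM; with it, the mapping may move. Returns the (possibly
// new) address, or nullptr on failure.
void* resize_mapping(void* addr, std::size_t old_len, std::size_t new_len, bool permit_relocation)
{
  void* p = mremap(addr, old_len, new_len, permit_relocation ? MREMAP_MAYMOVE : 0);
  return p == MAP_FAILED ? nullptr : p;
}

bool demo_resize()
{
  const std::size_t page = sysconf(_SC_PAGESIZE);
  // Map four pages, then give back the top two so there is a free gap to grow into.
  void* p = mmap(nullptr, 4 * page, PROT_NONE, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
  if (p == MAP_FAILED) return false;
  munmap(static_cast<char*>(p) + 2 * page, 2 * page);
  if (resize_mapping(p, 2 * page, 4 * page, false) != p) {  // grow in place into the gap
    munmap(p, 2 * page);
    return false;
  }
  const bool ok = resize_mapping(p, 4 * page, page, false) == p;  // shrinking stays put
  munmap(p, ok ? page : 4 * page);
  return ok;
}
```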

◆ try_barrier()

[inline], [noexcept], [inherited]

◆ try_barrier_for()

[inline], [noexcept], [inherited]

◆ try_barrier_until()

[inline], [noexcept], [inherited]

◆ try_lock_file()

[virtual], [noexcept], [inherited]

Tries to lock the inode referred to by the open handle for exclusive access, returning false if lock is currently unavailable.

Note that this may, or may not, interact with the byte range lock extensions. See unique_file_lock for a RAII locker.

- Errors returnable

- Any of the values POSIX flock() can return.

- Memory Allocations

- The default synchronous implementation in file_handle performs no memory allocation.
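The non-blocking variants correspond to flock() with LOCK_NB. A sketch (try_flock() and demo_try_flock() are hypothetical):

```cpp
#include <cassert>
#include <cerrno>
#include <cstdlib>   // mkstemp
#include <fcntl.h>
#include <sys/file.h>
#include <unistd.h>

// LOCK_NB makes flock() fail with EWOULDBLOCK instead of waiting.
// Returns 1 if locked, 0 if the lock is currently unavailable, -1 on other errors.
int try_flock(int fd, bool exclusive)
{
  if (flock(fd, (exclusive ? LOCK_EX : LOCK_SH) | LOCK_NB) == 0) return 1;
  return errno == EWOULDBLOCK ? 0 : -1;
}

bool demo_try_flock()
{
  char path[] = "/tmp/try_flock_sketch_XXXXXX";
  int a = mkstemp(path);
  if (a < 0) return false;
  // flock() locks belong to the open file description, so a second open()
  // contends with the first even within this process.
  int b = open(path, O_RDWR);
  bool ok = b >= 0 && try_flock(a, true) == 1  // a takes the exclusive lock
            && try_flock(b, false) == 0        // b cannot even share it
            && flock(a, LOCK_UN) == 0
            && try_flock(b, false) == 1;       // now it can
  if (b >= 0) close(b);
  close(a);
  unlink(path);
  return ok;
}
```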

◆ try_lock_file_range()

[inline], [noexcept], [inherited]

◆ try_lock_file_range_for()

[inline], [noexcept], [inherited]

◆ try_lock_file_range_until()

[inline], [noexcept], [inherited]

◆ try_lock_file_shared()

[virtual], [noexcept], [inherited]

Tries to lock the inode referred to by the open handle for shared access, returning false if lock is currently unavailable.

Note that this may, or may not, interact with the byte range lock extensions. See unique_file_lock for a RAII locker.

- Errors returnable

- Any of the values POSIX flock() can return.

- Memory Allocations

- The default synchronous implementation in file_handle performs no memory allocation.

◆ try_read()

[inline], [noexcept], [inherited]

◆ try_read_for()

[inline], [noexcept], [inherited]

◆ try_read_until()

[inline], [noexcept], [inherited]

◆ try_write()

[inline], [noexcept], [inherited]

◆ try_write_for()

[inline], [noexcept], [inherited]

◆ try_write_until()

[inline], [noexcept], [inherited]

◆ unlock_file_range()

[virtual], [noexcept], [inherited]

EXTENSION: Unlocks a byte range previously locked.

- Parameters

- offset: The offset to unlock. This should be an offset previously locked.
- bytes: The number of bytes to unlock. This should be a byte extent previously locked.

- Errors returnable

- Any of the values POSIX fcntl() can return.

- Memory Allocations

- None.

Reimplemented in llfio_v2_xxx::fast_random_file_handle.

◆ update_map()

[inline], [noexcept]

Update the size of the memory map to that of any backing section, up to the reservation limit.

◆ write() [1/3]

[inline], [noexcept]

This is an overloaded member function, provided for convenience. It differs from the above function only in what argument(s) it accepts.

◆ write() [2/3]

[inline], [noexcept], [inherited]

Write data to the open handle, preferentially using any i/o multiplexer set over the virtually overridable per-class implementation.

- Warning

- Depending on the implementation backend, not all of the input buffers may be written. For example, with a zeroed deadline, some backends may only consume as many buffers as the system has available write slots for; thus for those backends this call is "non-blocking" in the sense that it will return immediately even if it could not schedule a single buffer write. Another example is that some implementations will not auto-extend the length of a file when a write exceeds the maximum extent; you will need to issue a truncate(newsize) first.

- Returns

- The buffers written, which may not be the buffers input. The size of each scatter-gather buffer returned is updated with the number of bytes of that buffer transferred.

- Parameters

- reqs: A scatter-gather and offset request.
- d: An optional deadline by which the i/o must complete, else it is cancelled. Note that the function may return significantly after this deadline if the i/o takes a long time to cancel.

- Errors returnable

- Any of the values POSIX write() can return, errc::timed_out, or errc::operation_canceled. errc::not_supported may be returned if deadline i/o is not possible with this particular handle configuration (e.g. writing to regular files on POSIX or writing to a non-overlapped HANDLE on Windows).

- Memory Allocations

- The default synchronous implementation in file_handle performs no memory allocation.
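For a memory-mapped file, a write cannot grow the file by itself, because stores go straight into mapped pages. A sketch of the truncate-then-write order on POSIX (write_via_map() and demo_write_via_map() are hypothetical, and this is not how LLFIO's write() is implemented):

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>   // mkstemp
#include <cstring>   // std::memcpy, std::memcmp
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>

// Touching a mapped page wholly beyond end of file raises SIGBUS, so the file
// is grown with ftruncate() (the truncate(newsize) step) before the store.
bool write_via_map(int fd, off_t offset, const void* data, std::size_t len)
{
  struct stat st{};
  if (fstat(fd, &st) != 0) return false;
  const off_t new_end = offset + static_cast<off_t>(len);
  if (new_end > st.st_size && ftruncate(fd, new_end) != 0) return false;
  const off_t page = sysconf(_SC_PAGESIZE);
  const off_t aligned = offset - offset % page;  // mmap offsets must be page aligned
  const std::size_t map_len = len + static_cast<std::size_t>(offset - aligned);
  void* p = mmap(nullptr, map_len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, aligned);
  if (p == MAP_FAILED) return false;
  std::memcpy(static_cast<char*>(p) + (offset - aligned), data, len);
  return munmap(p, map_len) == 0;
}

bool demo_write_via_map()
{
  char path[] = "/tmp/write_map_sketch_XXXXXX";
  int fd = mkstemp(path);
  if (fd < 0) return false;
  unlink(path);
  char buf[3] = {};
  struct stat st{};
  const bool ok = write_via_map(fd, 10, "xyz", 3) && fstat(fd, &st) == 0 && st.st_size == 13
                  && pread(fd, buf, 3, 10) == 3 && std::memcmp(buf, "xyz", 3) == 0;
  close(fd);
  return ok;
}
```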

◆ write() [3/3]

[inline], [noexcept]

Write data to the open handle, preferentially using any i/o multiplexer set over the virtually overridable per-class implementation.

- Warning

- Depending on the implementation backend, not all of the input buffers may be written. For example, with a zeroed deadline, some backends may only consume as many buffers as the system has available write slots for; thus for those backends this call is "non-blocking" in the sense that it will return immediately even if it could not schedule a single buffer write. Another example is that some implementations will not auto-extend the length of a file when a write exceeds the maximum extent; you will need to issue a truncate(newsize) first.

- Returns

- The buffers written, which may not be the buffers input. The size of each scatter-gather buffer returned is updated with the number of bytes of that buffer transferred.

- Parameters

- reqs: A scatter-gather and offset request.
- d: An optional deadline by which the i/o must complete, else it is cancelled. Note that the function may return significantly after this deadline if the i/o takes a long time to cancel.

- Errors returnable

- Any of the values POSIX write() can return, errc::timed_out, or errc::operation_canceled. errc::not_supported may be returned if deadline i/o is not possible with this particular handle configuration (e.g. writing to regular files on POSIX or writing to a non-overlapped HANDLE on Windows).

- Memory Allocations

- The default synchronous implementation in file_handle performs no memory allocation.

◆ zero_memory()

[noexcept]

Zero the memory represented by the buffer. Differs from zero() because it acts on mapped memory, not on allocated file extents.

On Linux only, any full 4 KB pages will be deallocated from the system entirely, including the extents for them in any backing storage. On newer Linux kernels the kernel can additionally swap whole 4 KB pages for freshly zeroed ones, making this a very efficient way of zeroing large ranges of memory. Note that commit charge is not affected by this operation, as writes into the zeroed pages are guaranteed to succeed.

On Windows and Mac OS, this call currently only has an effect for non-backed memory due to lacking kernel support.

- Errors returnable

- Any of the errors returnable by madvise(), DiscardVirtualMemory() or the zero() function.
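For private anonymous memory on Linux, the whole-page part of the behaviour described above can be sketched with madvise(MADV_DONTNEED), with memset() covering the partial pages at each end. zero_anonymous() and demo_zero() are hypothetical, and the extent deallocation for file-backed maps is not shown:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <sys/mman.h>
#include <unistd.h>

// Whole pages inside the range are dropped with MADV_DONTNEED (private
// anonymous pages read back as zero afterwards); partial pages at either
// end are cleared with memset().
bool zero_anonymous(void* addr, std::size_t len)
{
  const auto page = static_cast<std::uintptr_t>(sysconf(_SC_PAGESIZE));
  const auto begin = reinterpret_cast<std::uintptr_t>(addr);
  const auto end = begin + len;
  const auto first = (begin + page - 1) & ~(page - 1);  // start of first whole page
  const auto last = end & ~(page - 1);                  // end of last whole page
  if (first >= last) {                                  // no whole page inside
    std::memset(addr, 0, len);
    return true;
  }
  std::memset(addr, 0, first - begin);
  std::memset(reinterpret_cast<void*>(last), 0, end - last);
  return madvise(reinterpret_cast<void*>(first), last - first, MADV_DONTNEED) == 0;
}

bool demo_zero()
{
  const std::size_t page = sysconf(_SC_PAGESIZE);
  void* r = mmap(nullptr, 3 * page, PROT_READ | PROT_WRITE, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
  if (r == MAP_FAILED) return false;
  auto* b = static_cast<unsigned char*>(r);
  std::memset(b, 0xFF, 3 * page);
  bool ok = zero_anonymous(b + 100, 2 * page);  // partial page, whole page, partial page
  for (std::size_t i = 0; ok && i < 3 * page; ++i)
    ok = b[i] == ((i >= 100 && i < 100 + 2 * page) ? 0 : 0xFF);
  munmap(r, 3 * page);
  return ok;
}
```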

Member Data Documentation

◆ _addr

[protected]

◆ _flag

[protected]

◆ _length

[protected]

◆ _offset

[protected]

◆ _pagesize

[protected]

◆ _reservation

[protected]

◆ _section

[protected]

The documentation for this class was generated from the following file:

- include/llfio/v2.0/map_handle.hpp