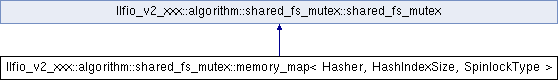

Many-entity, memory-mapped, shared/exclusive file system based lock.

#include "memory_map.hpp"

Classes

| Type | Name | Description |
|---|---|---|
| struct | _entity_idx | |

Public Types

| Type | Member | Description |
|---|---|---|
| using | entity_type = shared_fs_mutex::entity_type | The type of an entity id. |
| using | entities_type = shared_fs_mutex::entities_type | The type of a sequence of entities. |
| using | hasher_type = Hasher< entity_type::value_type > | The type of the hasher being used. |
| using | spinlock_type = SpinlockType | The type of the spinlock being used. |

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | memory_map (const memory_map &)=delete | No copy construction. |
| memory_map & | operator= (const memory_map &)=delete | No copy assignment. |
| | memory_map (memory_map &&o) noexcept | Move constructor. |
| memory_map & | operator= (memory_map &&o) noexcept | Move assignment. |
| const file_handle & | handle () const noexcept | Return the handle to the file being used for this lock. |
| virtual void | unlock (entities_type entities, unsigned long long) noexcept final | Unlock a previously locked sequence of entities. |
| entity_type | entity_from_buffer (const char *buffer, size_t bytes, bool exclusive=true) noexcept | Generates an entity id from a sequence of bytes. |
| template<typename T> entity_type | entity_from_string (const std::basic_string< T > &str, bool exclusive=true) noexcept | Generates an entity id from a string. |
| entity_type | random_entity (bool exclusive=true) noexcept | Generates a cryptographically random entity id. |
| void | fill_random_entities (span< entity_type > seq, bool exclusive=true) noexcept | Fills a sequence of entity ids with cryptographic randomness. Much faster than calling random_entity() individually. |
| result< entities_guard > | lock (entities_type entities, deadline d=deadline(), bool spin_not_sleep=false) noexcept | Lock all of a sequence of entities for exclusive or shared access. |
| result< entities_guard > | lock (entity_type entity, deadline d=deadline(), bool spin_not_sleep=false) noexcept | Lock a single entity for exclusive or shared access. |
| result< entities_guard > | try_lock (entities_type entities) noexcept | Try to lock all of a sequence of entities for exclusive or shared access. |
| result< entities_guard > | try_lock (entity_type entity) noexcept | Try to lock a single entity for exclusive or shared access. |

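Static Public Member Functions

| Type | Member | Description |
|---|---|---|
| static result< memory_map > | fs_mutex_map (const path_handle &base, path_view lockfile) noexcept | Initialises a shared filing system mutex using the file at lockfile. |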

Protected Member Functions

| Type | Member | Description |
|---|---|---|
| virtual result< void > | _lock (entities_guard &out, deadline d, bool spin_not_sleep) noexcept final | |

Static Protected Member Functions

| Type | Member | Description |
|---|---|---|
| static span< _entity_idx > | _hash_entities (_entity_idx *entity_to_idx, entities_type &entities) | |
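entity_from_buffer() and entity_from_string() turn arbitrary bytes into an entity id that carries an exclusive/shared flag. The following is a minimal sketch of that idea, not LLFIO's implementation. It assumes the entity packs a 63-bit value together with a one-bit exclusive flag, and that the bytes are digested with 64-bit FNV-1a, the algorithm behind the default Hasher in the class template signature. The names entity_sketch, fnv1a64(), entity_from_buffer_sketch() and entity_from_string_sketch() are hypothetical, and the real functions may digest bytes differently.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>

// Hypothetical stand-in for an entity id: 63 bits of value plus a flag
// saying whether the lock is wanted exclusive (1) or shared (0).
struct entity_sketch {
  std::uint64_t value : 63;
  std::uint64_t exclusive : 1;
};

// 64-bit FNV-1a over a byte buffer (offset basis and prime per the FNV spec).
inline std::uint64_t fnv1a64(const char* buffer, std::size_t bytes) {
  std::uint64_t h = 14695981039346656037ULL;
  for (std::size_t i = 0; i < bytes; ++i) {
    h ^= static_cast<unsigned char>(buffer[i]);
    h *= 1099511628211ULL;
  }
  return h;
}

// Analogue of entity_from_buffer(): hash the bytes, keep the low 63 bits.
inline entity_sketch entity_from_buffer_sketch(const char* buffer, std::size_t bytes,
                                               bool exclusive = true) {
  entity_sketch e{};
  e.value = fnv1a64(buffer, bytes) & ((std::uint64_t(1) << 63) - 1);
  e.exclusive = exclusive ? 1 : 0;
  return e;
}

// Analogue of entity_from_string(): hash the string's raw character bytes.
template <class T>
entity_sketch entity_from_string_sketch(const std::basic_string<T>& str, bool exclusive = true) {
  return entity_from_buffer_sketch(reinterpret_cast<const char*>(str.data()),
                                   str.size() * sizeof(T), exclusive);
}
```

Because the id is a pure function of the bytes, every process that names the same resource with the same string arrives at the same entity and therefore contends on the same slot.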

Detailed Description

template<template< class > class Hasher = QUICKCPPLIB_NAMESPACE::algorithm::hash::fnv1a_hash, size_t HashIndexSize = 4096, class SpinlockType = QUICKCPPLIB_NAMESPACE::configurable_spinlock::shared_spinlock<>>

class llfio_v2_xxx::algorithm::shared_fs_mutex::memory_map< Hasher, HashIndexSize, SpinlockType >


Template Parameters

- Hasher: An STL-compatible hash algorithm to use (defaults to fnv1a_hash).

- HashIndexSize: The size in bytes of the hash index to use (defaults to 4 KB, i.e. 4096 bytes).

- SpinlockType: The type of spinlock to use (defaults to a SharedMutex concept spinlock).
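To illustrate how Hasher and HashIndexSize could fit together, here is a sketch under stated assumptions: the hash index is treated as HashIndexSize bytes of 32-bit atomic spinlock words, and an entity's slot is its hashed value modulo the slot count. std::hash stands in for fnv1a_hash because both match the same template<class> class shape. hash_index_sketch and slot_for() are hypothetical, and LLFIO's actual slot layout may differ.

```cpp
#include <atomic>
#include <cstddef>
#include <cstdint>
#include <functional>

// Hypothetical model of the hash index: HashIndexSize bytes of slots, each
// slot assumed to be one 32-bit atomic spinlock word.
template <template <class> class Hasher = std::hash, std::size_t HashIndexSize = 4096>
struct hash_index_sketch {
  using value_type = std::uint64_t;  // stands in for entity_type::value_type
  using slot_type = std::atomic<std::uint32_t>;
  static constexpr std::size_t slot_count = HashIndexSize / sizeof(slot_type);
  static_assert(slot_count > 0, "HashIndexSize too small for even one slot");

  // Map an entity value to its slot: hash it, then reduce modulo slot_count.
  static std::size_t slot_for(value_type entity_value) {
    return static_cast<std::size_t>(Hasher<value_type>{}(entity_value) % slot_count);
  }
};
```

With the defaults and a 4-byte atomic this gives 1024 slots, so for well-distributed entity values any two entities have roughly a 1-in-1024 chance of sharing a slot. That shared slot is the source of the collision caveat listed further down.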

This is the highest performing filing system mutex in LLFIO, but it comes with a long list of potential gotchas. It works by creating a random temporary file somewhere on the system and placing its path in a file at the lock file location. The random temporary file is mapped into memory by all processes using the lock, and an open-addressed hash table is kept inside it. Each entity is hashed to a slot in the hash table, and that slot's individual spinlock is used to implement the exclusion. As with byte_ranges, each entity is locked individually in sequence, but if a particular lock fails, all are unlocked and the list is randomised before trying again. Because locking is implemented entirely in userspace using shared memory, without any kernel syscalls, performance is probably as fast as any many-arbitrary-entity shared locking system could be.
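The lock-all-or-back-off sequence described above can be sketched in ordinary process memory. This is a simplification, not LLFIO's code. Each slot is a plain exclusive std::atomic<bool> flag with no shared mode, and the table lives on the heap rather than in a memory-mapped temporary file. slot_table, try_lock_all(), lock_all() and unlock_all() are hypothetical names. Duplicate slot indices are removed before locking, because trying to acquire the same slot twice would make the retry loop spin forever.

```cpp
#include <algorithm>
#include <atomic>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical stand-in for the shared hash table: one exclusive flag per slot.
// LLFIO keeps its table in a memory-mapped temporary file; this one is on the heap.
class slot_table {
public:
  explicit slot_table(std::size_t slots) : locks_(slots) {}

  // One compare-and-swap: succeeds only if the slot was free.
  bool try_lock_slot(std::size_t i) {
    bool expected = false;
    return locks_[i].compare_exchange_strong(expected, true, std::memory_order_acquire);
  }

  void unlock_slot(std::size_t i) { locks_[i].store(false, std::memory_order_release); }

private:
  std::vector<std::atomic<bool>> locks_;
};

// Single attempt: take slots in the given order; on the first failure,
// release everything taken so far and report failure.
bool try_lock_all(slot_table& t, const std::vector<std::size_t>& slots) {
  for (std::size_t n = 0; n < slots.size(); ++n) {
    if (!t.try_lock_slot(slots[n])) {
      for (std::size_t k = 0; k < n; ++k) t.unlock_slot(slots[k]);
      return false;
    }
  }
  return true;
}

// Spin until all requested slots are held, reshuffling the order after every
// failed attempt. Returns the de-duplicated set of slots actually held.
std::vector<std::size_t> lock_all(slot_table& t, std::vector<std::size_t> slots,
                                  std::mt19937& rng) {
  std::sort(slots.begin(), slots.end());
  slots.erase(std::unique(slots.begin(), slots.end()), slots.end());
  while (!try_lock_all(t, slots)) {
    std::shuffle(slots.begin(), slots.end(), rng);
  }
  return slots;
}

// Release exactly the slots returned by lock_all().
void unlock_all(slot_table& t, const std::vector<std::size_t>& held) {
  for (std::size_t s : held) t.unlock_slot(s);
}
```

Shuffling after each failure stops two lockers that want overlapping sets from repeatedly blocking each other in the same order. The cost is that the loop spins rather than sleeps, which is the first caveat listed below.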

As it uses shared memory, this implementation of shared_fs_mutex cannot work over a networked drive. If you attempt to open this lock on a network drive and the first user of the lock is not on the local machine, fs_mutex_map() will return errc::no_lock_available.

Benefits:

- Linear complexity in the number of concurrent users, until the hash table starts to fill up or hashed entries collide.

- Sudden power loss during use is recovered from.

- Safe for multithreaded usage of the same instance.

- In the lightly contended case, an order of magnitude faster than any other shared_fs_mutex algorithm.

Caveats:

- No ability to sleep until a lock becomes free, so waiting CPUs spin at 100%.

- Sudden process exit with locks held will deadlock all other users.

- Exponential complexity in the number of entities being concurrently locked.

- Exponential complexity in concurrency if entities hash to the same cache line. Most SMP and especially NUMA systems have a finite bandwidth for atomic compare-and-swap operations, and every attempt to lock or unlock an entity under this implementation involves several of those operations. Under heavy contention, whole-system performance nose-dives very noticeably from excessive atomic operations, and things like audio and the mouse pointer will stutter.

- Different entities sometimes hash to the same offset and collide with one another, causing very poor performance.

- Memory mapped files need to be cache unified with normal i/o in your OS kernel. Known OSs which don't use a unified cache for memory mapped and normal i/o are QNX and OpenBSD. Furthermore, doing normal i/o and memory mapped i/o to the same file must not corrupt the file; in the past, some editions of the Linux and OS X kernels did exactly that.

- If your OS doesn't have sane byte range locks (OS X, BSD, older Linuxes) and multiple objects in your process use the same lock file, misoperation will occur.
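Both the 100% CPU caveat and the atomic-bandwidth caveat follow from how a spinlock word is acquired. The sketch below is a hypothetical shared/exclusive spinlock packed into one 32-bit atomic: 0 means free, all bits set means exclusively held, and any other value is a count of shared holders. It is not QUICKCPPLIB's shared_spinlock, whose layout may differ. It does show that every acquire and release is at least one atomic read-modify-write on the same cache line, and that waiting is a busy loop.

```cpp
#include <atomic>
#include <cstdint>

// Hypothetical shared/exclusive spinlock in a single 32-bit word.
class shared_spinlock_sketch {
  static constexpr std::uint32_t kExclusive = 0xFFFFFFFFu;  // writer holds it
  std::atomic<std::uint32_t> state_{0};                      // 0 = free

public:
  // Exclusive: one CAS from "free" to "writer".
  bool try_lock() {
    std::uint32_t expected = 0;
    return state_.compare_exchange_strong(expected, kExclusive, std::memory_order_acquire);
  }
  void unlock() { state_.store(0, std::memory_order_release); }

  // Shared: CAS the reader count up by one, retrying while other readers
  // change the word, but failing at once if a writer holds it.
  bool try_lock_shared() {
    std::uint32_t cur = state_.load(std::memory_order_relaxed);
    while (cur != kExclusive && cur + 1 != kExclusive) {
      if (state_.compare_exchange_weak(cur, cur + 1, std::memory_order_acquire,
                                       std::memory_order_relaxed))
        return true;
    }
    return false;
  }
  void unlock_shared() { state_.fetch_sub(1, std::memory_order_release); }

  // Blocking variants never sleep: they spin the CPU until the CAS succeeds.
  void lock() { while (!try_lock()) { } }
  void lock_shared() { while (!try_lock_shared()) { } }
};
```

When many threads hammer slots that share a cache line, each of these compare-and-swap attempts forces that line to move between cores, which is where the system-wide slowdown comes from.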

Requires handle::current_path() to be working.

Todo:

- memory_map::_hash_entities needs to hash x16, x8 and x4 at a time to encourage auto-vectorisation.
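The todo item above concerns giving the compiler independent lanes to vectorise. This sketch covers only the x4 tier. It assumes a splitmix64-style finalizer as the per-entity hash, which stands in for the default fnv1a_hash. It also assumes a power-of-two slot count so that a mask can replace the modulo. mix64() and hash_to_slots() are hypothetical. A fuller version would add x16 and x8 loops ahead of the x4 loop.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical per-entity hash (splitmix64 finalizer): branch-free and cheap.
inline std::uint64_t mix64(std::uint64_t x) {
  x ^= x >> 30;
  x *= 0xbf58476d1ce4e5b9ULL;
  x ^= x >> 27;
  x *= 0x94d049bb133111ebULL;
  x ^= x >> 31;
  return x;
}

// Hash n entity values into slot indices. slot_count is assumed to be a power
// of two so the slot is a mask of the hash rather than a division.
inline void hash_to_slots(const std::uint64_t* values, std::size_t* slots,
                          std::size_t n, std::size_t slot_count) {
  const std::uint64_t mask = slot_count - 1;
  std::size_t i = 0;
  // x4 tier: four independent hash computations per iteration.
  for (; i + 4 <= n; i += 4) {
    const std::uint64_t h0 = mix64(values[i + 0]);
    const std::uint64_t h1 = mix64(values[i + 1]);
    const std::uint64_t h2 = mix64(values[i + 2]);
    const std::uint64_t h3 = mix64(values[i + 3]);
    slots[i + 0] = static_cast<std::size_t>(h0 & mask);
    slots[i + 1] = static_cast<std::size_t>(h1 & mask);
    slots[i + 2] = static_cast<std::size_t>(h2 & mask);
    slots[i + 3] = static_cast<std::size_t>(h3 & mask);
  }
  // Scalar tail for the remaining zero to three entities.
  for (; i < n; ++i) slots[i] = static_cast<std::size_t>(mix64(values[i]) & mask);
}
```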

Member Function Documentation

◆ fs_mutex_map()

static result< memory_map > fs_mutex_map (const path_handle &base, path_view lockfile) noexcept [inline, static, noexcept]

Initialises a shared filing system mutex using the file at lockfile.

- Errors returnable: Awaiting the clang result<> AST parser, which will auto-generate all the error codes that could occur. A particularly important one is errc::no_lock_available, which will be returned if the lock is in use by another computer on a network.

The documentation for this class was generated from the following file:

- include/llfio/v2.0/algorithm/shared_fs_mutex/memory_map.hpp