|

| void | triecheckvalidity (const key_type *tocheck=nullptr) const noexcept |

| |

| constexpr | bitwise_trie () |

| |

| template<class Arg , class... Args, typename std::enable_if<(std::is_constructible< Base, Arg, Args... >::value), bool >::type = true> |

| constexpr | bitwise_trie (Arg &&arg, Args &&...args) |

| |

| | bitwise_trie (const bitwise_trie &o) noexcept |

| |

| bitwise_trie & | operator= (const bitwise_trie &o) noexcept |

| |

| void | swap (bitwise_trie &o) noexcept |

| | Swaps the contents of the index.

|

| |

| QUICKCPPLIB_NODISCARD constexpr bool | empty () const noexcept |

| | True if the bitwise trie is empty.

|

| |

| constexpr size_type | size () const noexcept |

| | Returns the number of items in the bitwise trie.

|

| |

| constexpr size_type | max_size () const noexcept |

| | Returns the maximum number of items in the index.

|

| |

| reference | front () noexcept |

| | Returns the front of the index.

|

| |

| const_reference | front () const noexcept |

| | Returns the front of the index.

|

| |

| reference | back () noexcept |

| | Returns the back of the index.

|

| |

| const_reference | back () const noexcept |

| | Returns the back of the index.

|

| |

| iterator | begin () noexcept |

| | Returns an iterator to the first item in the index.

|

| |

| const_iterator | begin () const noexcept |

| | Returns an iterator to the first item in the index.

|

| |

| reverse_iterator | rbegin () noexcept |

| | Returns an iterator to the last item in the index.

|

| |

| const_reverse_iterator | rbegin () const noexcept |

| | Returns an iterator to the last item in the index.

|

| |

| const_iterator | cbegin () const noexcept |

| | Returns an iterator to the first item in the index.

|

| |

| const_reverse_iterator | crbegin () const noexcept |

| | Returns an iterator to the last item in the index.

|

| |

| iterator | end () noexcept |

| | Returns an iterator to the item after the last in the index.

|

| |

| const_iterator | end () const noexcept |

| | Returns an iterator to the item before the first in the index.

|

| |

| reverse_iterator | rend () noexcept |

| | Returns an iterator to the item before the first in the index.

|

| |

| const_reverse_iterator | rend () const noexcept |

| | Returns an iterator to the item before the first in the index.

|

| |

| const_iterator | cend () const noexcept |

| | Returns an iterator to the item after the last in the index.

|

| |

| const_reverse_iterator | crend () const noexcept |

| | Returns an iterator to the item before the first in the index.

|

| |

| constexpr void | clear () noexcept |

| | Clears the index.

|

| |

| size_type | count (key_type k) const noexcept |

| | Return how many items with key there are.

|

| |

| size_type | count (const_iterator it) const noexcept |

| | Return how many items with the same key as iterator there are.

|

| |

| iterator | insert (pointer p) |

| |

| iterator | erase (const_iterator it) noexcept |

| | Erases an item.

|

| |

| void | erase (pointer p) noexcept |

| | Erases an item.

|

| |

| iterator | erase (key_type k) noexcept |

| | Erases an item.

|

| |

| iterator | find (key_type k) const noexcept |

| | Finds an item.

|

| |

| iterator | find_equal_or_larger (key_type k, int64_t rounds) const noexcept |

| |

| iterator | find_equal_or_next_largest (key_type k) const noexcept |

| |

| iterator | upper_bound (key_type k) const noexcept |

| | Finds the item next larger than the key. This is equivalent to find_equal_or_next_largest(k + 1).

|

| |

| iterator | upper_bound_estimate (key_type k, int64_t rounds) const noexcept |

| | Estimate the item next larger than the key. This is equivalent to find_equal_or_larger(k + 1, rounds).

|

| |

| bool | contains (key_type k) const noexcept |

| | True if the index contains the key.

|

| |

| reference | operator[] (key_type k) noexcept |

| | Returns a reference to the specified element, aborting if key not found.

|

| |

| const_reference | operator[] (const key_type &k) const noexcept |

| | Returns a reference to the specified element, aborting if key not found.

|

| |

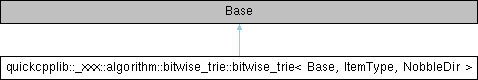

template<class Base, class ItemType, int NobbleDir = 0>

class quickcpplib::_xxx::algorithm::bitwise_trie::bitwise_trie< Base, ItemType, NobbleDir >

Never-allocating in-place bitwise Fredkin trie index head type.

- Template Parameters

-

| Base | The base type from which to inherit (and thus overlay the index member functions). |

| ItemType | The type of item indexed. |

| NobbleDir | -1 to nobble zeros, +1 to nobble ones, 0 to nobble both equally (see below). |

This uses the bitwise Fredkin trie algorithm to index a collection of items by an unsigned integral key (e.g. a size_t from std::hash), providing identical O(log2 N) time insertion, removal, and finds (i.e. insert, remove and find all take identical time). It is thus most like a red-black tree. However it has a number of very useful characteristics which make it invaluable in certain use cases.

Firstly, unlike a hash table, this algorithm requires no additional memory allocation whatsoever. It wholly and exclusively uses only the items added to the index, and the index head, for storage. This makes it invaluable for bootstrap type scenarios, such as in memory allocators or first boot of a kernel. It also works well with C++ exceptions globally disabled.

Secondly, unlike a hash table it provides a bounded time close fit find, which is particularly useful for where you need an item closely matching what you need, but you don't care if it's the closest matching item. An example of where this is super useful to have is in memory allocators, where you need a free block bigger than or equal to the size you are allocating.

There is also a guaranteed closest rather than closely matching item find, however it is more expensive than a red-black tree whose guaranteed sortedness makes finding exact upper bounds easy.

Thirdly, unlike any other algorithm, this one is much faster for low key values than large key values, so if you can keep most of your keys small, you will see more benefit.

As you can see, bitwise tries have most of the same benefits of red-black trees, but they approximate hash tables for performance of insert-find-remove-findclosefit on most CPUs. This makes them very compelling where red-black trees are too slow, or where some concurrency is desirable (concurrent modify is easy to implement for all keys whose topmost set bit differs).

The order of the items during iteration is somewhat sorted by key incrementing. Note the somewhat, it is fully ordered for each top bit set increment, but within each of those there is an interleave of insertion order and key increment. A bubble sort can be a good sort algorithm depending on relationship of key increment to insertion order, but you shouldn't assume it to be so without empirically checking.

Items inserted with the same key preserve order of insertion. Performance is great on all CPUs which have a single cycle opcode for finding the first set bit in a key. If your CPU has a slow bitscan opcode (e.g. older Intel Atom), performance is merely good rather than great.

The index is intrusive, as in, your types must provide the storage needed by the index for housekeeping. You can very tightly pack or compress or calculate those numbers by defining a specialised bitwise_trie_head_accessors<Base, ItemType> if you wish to customise storage of housekeeping for the trie index head; and/or specialise bitwise_trie_item_accessors<ItemType> if you wish to customise storage of housekeeping for each item indexed by the trie. The default implementations of those accessor types require various member variables prefixed with trie_ in your types:

- The default

bitwise_trie_head_accessors<Base, ItemType> requires the following member variables in the trie index head type:

<unsigned type> trie_countItemType *trie_children[8 * sizeof(<unsigned type>)]bool trie_nobbledir (if you use equal nobbling only)

- The default

bitwise_trie_item_accessors<ItemType> requires the following member variables in the trie item type:

ItemType *trie_parentItemType *trie_child[2]ItemType *trie_sibling[2] (if you allow multiple items with the same key value only)KeyType trie_key

Again, I stress that the above can be completely customised and packed tighter with custom accessor type specialisations for your type. The tigher you can pack your structures, the more fits into L3 cache, and the faster everything goes. You can also store these in a file, and store offset pointers which are safe when a memory map relocates in memory.

Close and closest fit finds always find an item whose key is larger or equal to the key sought. Lower bound is not implemented yet.

Most of this implementation is lifted from https://github.com/ned14/nedtries, but it has been modernised for current C++ idomatic practice.

Differences from a C++ container

As this is an index, not a container, the value type is always a pointer. I chose pointers instead of references to aid readability i.e. it is very clear from reading code using this index that it is an index not a container.

Because custom accessors may not store pointers as pointers, reference is not a reference, but also a pointer. Iteration thus yields pointers, not references.

Nobble direction

During item removal only, to keep the tree balanced one needs to choose which node to nobble. If for all the keys you use there is a surplus of zeros after the first set bit (this would be common for pointers), you should nobble zeros by setting the template parameter NobbleDir to -1. If for all the keys you use there is a surplus of ones after the first set bit, you should nobble ones by setting the template parameter NobbleDir to 1.

If for all the keys you use there is an equal balance between zeros and ones after the first set bit (this would be common for hashes), you will get the best results if you add state storage to keep a nobble direction boolean which flips between false and true such that nobbling is equally distributed. In this case, set NobbleDir to 0.

tl;dr; If your key results from a hash function, choose NobbleDir = 0. If your key results from a pointer, or is some number which clusters on regular even boundaries, choose NobbleDir = -1.

- Todo:

- Implement

lower_bound().